Course link: this course is also free and provides a Jupyter notebook environment for testing.

1. Introduction

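LangChain is an open-source project created by Harrison Chase. It is a toolkit, available in Python and JavaScript, for quickly building applications on top of LLMs. This course is about developing with LangChain.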

2. Models, Prompts and Output Parsers

It is built around three components:

- Models: the large language model (LLM)

- Prompts: the prompt input sent to the model

- Parsers: parse the LLM's output

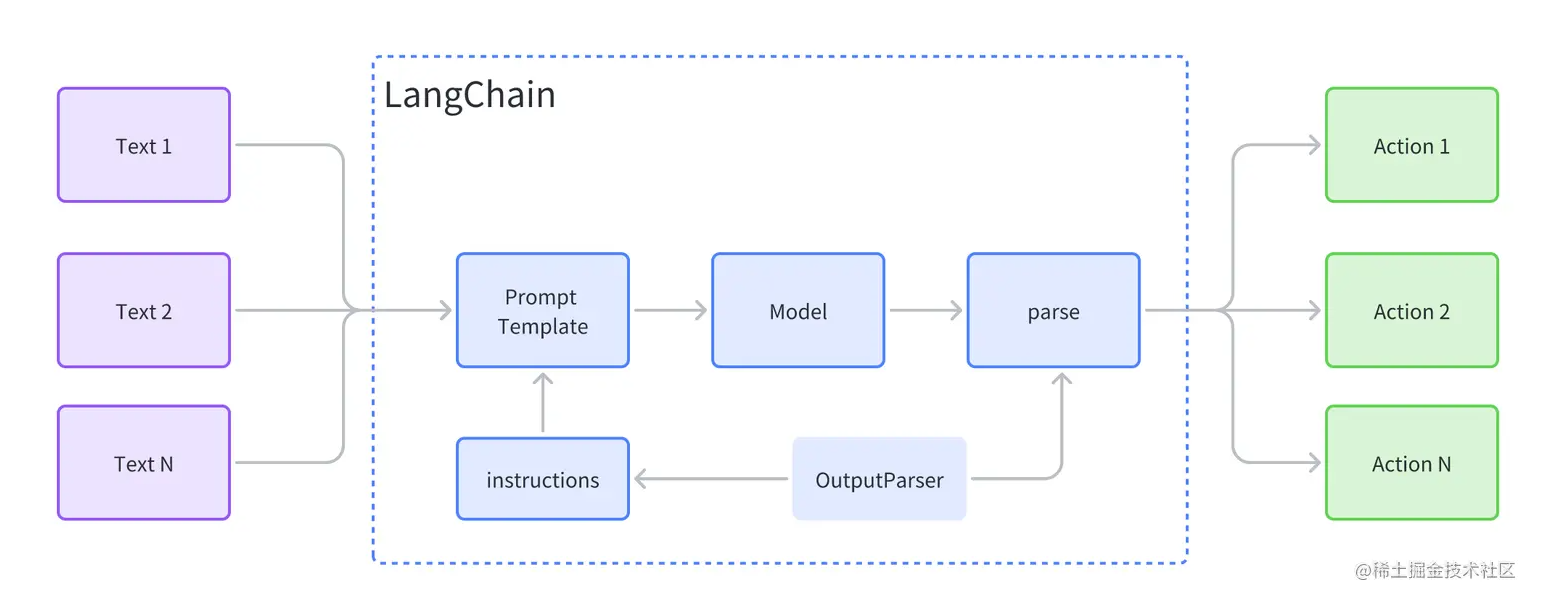

Diagram of LangChain's overall architecture and flow (source).

Unlike the earlier course, GPT is instantiated here with langchain.chat_models.ChatOpenAI rather than with the openai package.

```python
from langchain.chat_models import ChatOpenAI

chat = ChatOpenAI(temperature=0.0)
chat
```

Printing chat gives the output below, which shows the parameters you can set:

ChatOpenAI(verbose=False, callbacks=None, callback_manager=None, client=<class 'openai.api_resources.chat_completion.ChatCompletion'>, model_name='gpt-3.5-turbo', temperature=0.0, model_kwargs={}, openai_api_key=None, openai_api_base=None, openai_organization=None, request_timeout=None, max_retries=6, streaming=False, n=1, max_tokens=None)

We can set model_name and temperature:

```python
chat = ChatOpenAI(model_name='gpt-3.5-turbo', temperature=0.0)  # 0 means low randomness; 0.9 is very random
```
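As a rough illustration of what that temperature comment means, here is a minimal sketch of temperature-scaled softmax over made-up token scores. It shows the general sampling idea only, under the assumption that scores are turned into probabilities this way; it is not OpenAI's implementation, and softmax_with_temperature is a hypothetical helper.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; a lower temperature sharpens the distribution."""
    if temperature <= 0:
        # treat temperature 0 as greedy decoding: all probability on the top score
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
for t in (0.0, 0.2, 0.9):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
```

Lower temperatures concentrate probability on the top-scoring token, so repeated calls give nearly the same answer; higher temperatures spread it out and make outputs more varied.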

Prompt template

ChatPromptTemplate turns the simple prompt strings we used before into templates with input variables.

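```python
from langchain.prompts import ChatPromptTemplate

template_string = """Translate the text that is delimited by triple backticks into a style that is {style}. text: ```{text}```
"""
prompt_template = ChatPromptTemplate.from_template(template_string)
prompt_template.messages[0].prompt
```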

The printout shows the two input variables and how the template works:

PromptTemplate(input_variables=['style', 'text'], output_parser=None, partial_variables={}, template='Translate the text that is delimited by triple backticks into a style that is {style}. text: ```{text}```\n', template_format='f-string', validate_template=True)
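The printout records input_variables=['style', 'text'] and template_format='f-string'. The sketch below shows, with only the standard library, how such variables can be found in a str.format-style template and then filled in. It illustrates the idea and is not LangChain's code; find_input_variables is a hypothetical helper.

```python
from string import Formatter

template_string = (
    "Translate the text that is delimited by triple backticks "
    "into a style that is {style}. text: ```{text}```\n"
)


def find_input_variables(template):
    """Return the sorted, de-duplicated placeholder names in a str.format-style template."""
    names = {field for _, field, _, _ in Formatter().parse(template) if field}
    return sorted(names)


print(find_input_variables(template_string))
print(template_string.format(style="formal English", text="hello"))
```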

Now we can fill in the variables to build the messages:

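```python
# customer_style and customer_email are plain strings defined earlier in the notebook
customer_messages = prompt_template.format_messages(
    style=customer_style,
    text=customer_email)
customer_response = chat(customer_messages)
print(customer_response.content)
```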

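I found a good blog post on this topic, "Developing LLM Applications with LangChain (2): Models, Prompts and Output Parsing". You can read it directly; here I'll just record the issues I ran into and a summary.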

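LangChain turns the prompt into a template and sends it to the LLM. The parsers in output_parsers build format_instructions that tell the model to answer in a specified format, such as JSON, and then parse the model's output in that format.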

```python
import os

from langchain.chat_models import ChatOpenAI
from langchain.prompts import ChatPromptTemplate, HumanMessagePromptTemplate
from langchain.output_parsers import ResponseSchema, StructuredOutputParser

os.environ['OPENAI_API_KEY'] = "sk-"  # fill in your own API key
# Optional proxy, if you need one to reach the API:
# import openai
# openai.proxy = {"http": "socks5://127.0.0.1:1080", "https": "socks5://127.0.0.1:1080"}

customer_review = """\
The MacBook Pro is fantastic! I love it! The M2 chip is incredibly powerful, though the price is a little high! The battery life is unreal! It doesn't get hot! It even has the high-tech Touch Bar! There's a fair amount of Mac software now, and all the common office apps are available! It handles everyday office work with no problem at all! I especially want to talk about its audio jack! This generation of MacBook Pro has advanced support for high-impedance headphones! With the same headphones, it sounds better plugged into the MacBook Pro than into an iPhone! And then there's its recording performance! With a simple adapter, a condenser mic and GarageBand, the recordings are unreal! Really great! I have an older Mac, but this MacBook Pro records many times better than my earlier Macs! Truly amazing! Great for musicians! (Personally, recordings seem even better when it's not plugged into power!)"""

# Write the prompt
review_template = """\
For the following text, extract the following information:

gift: Was the item purchased as a gift for someone else? \
Answer True if yes, False if not or unknown.

delivery_days: How many days did it take for the product \
to arrive? If this information is not found, output -1.

price_value: Extract any sentences about the value or price, \
and output them as a comma separated Python list.

Format the output as JSON with the following keys:
gift
delivery_days
price_value

text: {text}
"""

prompt_template = ChatPromptTemplate.from_template(review_template)
messages = prompt_template.format_messages(text=customer_review)
print(messages)

chat = ChatOpenAI(temperature=0.0)
response = chat(messages)
print(response.content)

# Describe each field we want back, then build a parser from the schemas
gift_schema = ResponseSchema(name="gift",
                             description="Was the item purchased as "
                                         "a gift for someone else? "
                                         "Answer True if yes, False if not or unknown.")
delivery_days_schema = ResponseSchema(name="delivery_days",
                                      description="How many days did it take for "
                                                  "the product to arrive? If this "
                                                  "information is not found, output -1.")
price_value_schema = ResponseSchema(name="price_value",
                                    description="Extract any sentences about the "
                                                "value or price, and output them "
                                                "as a comma separated Python list.")

response_schemas = [gift_schema,
                    delivery_days_schema,
                    price_value_schema]
output_parser = StructuredOutputParser.from_response_schemas(response_schemas)
format_instructions = output_parser.get_format_instructions()
print(format_instructions)
```
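get_format_instructions() generates text asking the model to reply with a JSON snippet containing one entry per ResponseSchema. The sketch below imitates that idea with a hypothetical FieldSpec dataclass and a hypothetical build_format_instructions function; the wording is only an approximation, so print the real format_instructions to see exactly what LangChain produces.

```python
from dataclasses import dataclass


@dataclass
class FieldSpec:
    """Hypothetical stand-in for ResponseSchema: a field name plus a description."""
    name: str
    description: str
    kind: str = "string"


def build_format_instructions(fields):
    """Render the expected JSON shape as text that can be pasted into a prompt."""
    lines = [f'\t"{f.name}": {f.kind}  // {f.description}' for f in fields]
    return (
        "The output should be a markdown code snippet formatted as JSON:\n"
        "```json\n{\n" + "\n".join(lines) + "\n}\n```"
    )


fields = [
    FieldSpec("gift", "Was the item purchased as a gift for someone else?"),
    FieldSpec("delivery_days", "How many days did it take for the product to arrive?"),
    FieldSpec("price_value", "Sentences about the value or price, as a list."),
]
print(build_format_instructions(fields))
```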

format_instructions can also be combined into the prompt itself:

```python
review_template_2 = """\
For the following text, extract the following information:

gift: Was the item purchased as a gift for someone else? \
Answer True if yes, False if not or unknown.

delivery_days: How many days did it take for the product \
to arrive? If this information is not found, output -1.

price_value: Extract any sentences about the value or price, \
and output them as a comma separated Python list.

text: {text}

{format_instructions}
"""

human_prompt_template = HumanMessagePromptTemplate.from_template(review_template_2)
prompt = ChatPromptTemplate.from_messages([human_prompt_template])
messages = prompt.format_messages(text=customer_review, format_instructions=format_instructions)
print(messages[0].content)

# Send the prompt and parse the JSON reply into a Python dict
response = chat(messages)
output_dict = output_parser.parse(response.content)
print(output_dict)
```
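Parsing the reply mostly means finding the fenced JSON snippet that the format instructions asked for and loading it. A rough stdlib-only approximation of that step follows; it is not LangChain's implementation, and both extract_json_block and the sample reply are made up.

```python
import json
import re


def extract_json_block(text):
    """Load the JSON inside a ```json ... ``` fence, or the whole text if there is no fence."""
    match = re.search(r"```(?:json)?\s*(.*?)\s*```", text, re.DOTALL)
    payload = match.group(1) if match else text
    return json.loads(payload)


reply = '```json\n{"gift": false, "delivery_days": -1, "price_value": ["the price is a little high"]}\n```'
print(extract_json_block(reply))
```

Once parsed, gift is a real boolean and delivery_days a number, which is the main advantage over reading response.content as a plain string.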

3. Memory

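This section covers the following memory types in LangChain:

- ConversationBufferMemory: buffers the conversation so the full dialogue is stored;

- ConversationBufferWindowMemory: limits memory by the number of exchanges, set with k;

- ConversationTokenBufferMemory: limits memory by the number of tokens;

- ConversationSummaryMemory: summarizes the conversation to reduce token usage, which lowers cost and allows longer conversations. The example below uses its variant ConversationSummaryBufferMemory, which keeps recent exchanges verbatim and summarizes older ones once max_token_limit is exceeded.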

ConversationBufferMemory

```python
import os

from langchain.chat_models import ChatOpenAI
from langchain.chains import ConversationChain
from langchain.memory import ConversationBufferMemory

os.environ['OPENAI_API_KEY'] = "sk-"

llm = ChatOpenAI(temperature=0.0)
memory = ConversationBufferMemory()
conversation = ConversationChain(
    llm=llm,
    memory=memory,
    verbose=True  # print the prompt LangChain builds internally
)

# Start the conversation with predict
conversation.predict(input="Hi, my name is Andrew")

# memory.buffer holds the conversation history
print(memory.buffer)

# Returns a dict keyed by 'history': {'history': ...}
print(memory.load_memory_variables({}))
```
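Conceptually, ConversationBufferMemory appends every exchange and hands the whole transcript back under the history key, which ConversationChain pastes into the next prompt. A stdlib-only sketch of that behavior follows; the class name and the AI reply are made up, and the Human/AI prefixes are assumed to match LangChain's defaults.

```python
class SimpleBufferMemory:
    """Hypothetical stand-in: keep every exchange and render it as one 'history' string."""

    def __init__(self, human_prefix="Human", ai_prefix="AI"):
        self.human_prefix = human_prefix
        self.ai_prefix = ai_prefix
        self.turns = []  # list of (human_text, ai_text) pairs, oldest first

    def save_context(self, inputs, outputs):
        self.turns.append((inputs["input"], outputs["output"]))

    @property
    def buffer(self):
        lines = []
        for human, ai in self.turns:
            lines.append(f"{self.human_prefix}: {human}")
            lines.append(f"{self.ai_prefix}: {ai}")
        return "\n".join(lines)

    def load_memory_variables(self, _inputs):
        return {"history": self.buffer}


memory = SimpleBufferMemory()
memory.save_context({"input": "Hi, my name is Andrew"}, {"output": "Hello Andrew!"})
print(memory.load_memory_variables({}))
```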

ConversationBufferWindowMemory

```python
from langchain.memory import ConversationBufferWindowMemory

memory = ConversationBufferWindowMemory(k=2)  # keep the last 2 exchanges

# Save exchanges to memory manually
memory.save_context({"input": "Hi"},
                    {"output": "What's up"})
memory.save_context({"input": "Not much, just hanging"},
                    {"output": "Cool"})

# Both exchanges above are now in memory;
# with k=1, only the most recent one would be kept
memory.load_memory_variables({})
```
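The windowing rule is easy to picture as a fixed-size queue. Here is a minimal sketch, assuming the window is counted in whole exchanges (one input plus one output) as k is described above; SimpleWindowMemory is hypothetical, not LangChain's class.

```python
from collections import deque


class SimpleWindowMemory:
    """Hypothetical stand-in: remember only the last k exchanges."""

    def __init__(self, k):
        self.turns = deque(maxlen=k)  # the oldest exchange is dropped automatically

    def save_context(self, inputs, outputs):
        self.turns.append((inputs["input"], outputs["output"]))

    def load_memory_variables(self, _inputs):
        lines = []
        for human, ai in self.turns:
            lines += [f"Human: {human}", f"AI: {ai}"]
        return {"history": "\n".join(lines)}


memory = SimpleWindowMemory(k=1)
memory.save_context({"input": "Hi"}, {"output": "What's up"})
memory.save_context({"input": "Not much, just hanging"}, {"output": "Cool"})
print(memory.load_memory_variables({}))
```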

ConversationTokenBufferMemory

```python
from langchain.memory import ConversationTokenBufferMemory

# llm is the ChatOpenAI instance from above; it is used to count tokens.
# max_token_limit keeps only the most recent messages that fit within 30 tokens
memory = ConversationTokenBufferMemory(llm=llm, max_token_limit=30)
memory.save_context({"input": "AI is what?!"},
                    {"output": "Amazing!"})
memory.save_context({"input": "Backpropagation is what?"},
                    {"output": "Beautiful!"})
memory.save_context({"input": "Chatbots are what?"},
                    {"output": "Charming!"})
memory.load_memory_variables({})
# Only the messages from "AI: Beautiful!" onward remain
```
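To see why only the messages from "AI: Beautiful!" onward survive, here is a sketch of the pruning rule: after each save, drop the oldest individual messages until the total fits the limit. LangChain counts tokens with the model's tokenizer; this sketch assumes a crude stand-in (one token per whitespace-separated word), so its limit of 8 is not comparable to the 30 used above. TokenBufferSketch and count_tokens are hypothetical.

```python
def count_tokens(text):
    # crude stand-in for a real tokenizer: one token per whitespace-separated word
    return len(text.split())


class TokenBufferSketch:
    """Hypothetical stand-in: keep the newest messages whose total token count fits the limit."""

    def __init__(self, max_token_limit):
        self.max_token_limit = max_token_limit
        self.messages = []  # rendered messages such as "Human: ...", oldest first

    def _total(self):
        return sum(count_tokens(m) for m in self.messages)

    def save_context(self, inputs, outputs):
        self.messages.append(f"Human: {inputs['input']}")
        self.messages.append(f"AI: {outputs['output']}")
        # drop the oldest messages until the buffer fits the limit
        while self.messages and self._total() > self.max_token_limit:
            self.messages.pop(0)

    def load_memory_variables(self, _inputs):
        return {"history": "\n".join(self.messages)}


memory = TokenBufferSketch(max_token_limit=8)
memory.save_context({"input": "AI is what?!"}, {"output": "Amazing!"})
memory.save_context({"input": "Backpropagation is what?"}, {"output": "Beautiful!"})
memory.save_context({"input": "Chatbots are what?"}, {"output": "Charming!"})
print(memory.load_memory_variables({}))
```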

ConversationSummaryBufferMemory

```python
from langchain.memory import ConversationSummaryBufferMemory

# create a long string
schedule = "There is a meeting at 8am with your product team. \
You will need your powerpoint presentation prepared. \
9am-12pm have time to work on your LangChain \
project which will go quickly because Langchain is such a powerful tool. \
At Noon, lunch at the italian restaurant with a customer who is driving \
from over an hour away to meet you to understand the latest in AI. \
Be sure to bring your laptop to show the latest LLM demo."

# Summarizes the conversation with llm (the ChatOpenAI instance from above)
# and keeps the memory within 100 tokens
memory = ConversationSummaryBufferMemory(llm=llm, max_token_limit=100)
memory.save_context({"input": "Hello"}, {"output": "What's up"})
memory.save_context({"input": "Not much, just hanging"},
                    {"output": "Cool"})
memory.save_context({"input": "What is on the schedule today?"},
                    {"output": f"{schedule}"})
memory.load_memory_variables({})
```
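ConversationSummaryBufferMemory combines the two ideas above: recent messages are kept verbatim, and messages pushed out by max_token_limit are folded into a running summary written by the LLM. Below is a sketch of that bookkeeping, with a hypothetical stub_summarize in place of the LLM call and word counts in place of real tokens; rendering the summary as a "System:" line is an assumption about the output shape.

```python
def stub_summarize(old_summary, pruned_lines):
    """Stand-in for the LLM call: keep the first three words of each pruned line."""
    bits = [" ".join(line.split()[:3]) + "..." for line in pruned_lines]
    return " ".join(part for part in [old_summary, *bits] if part)


class SimpleSummaryBufferMemory:
    """Hypothetical stand-in: recent messages verbatim, older ones folded into a summary."""

    def __init__(self, max_token_limit, summarize):
        self.max_token_limit = max_token_limit
        self.summarize = summarize  # callable(old_summary, pruned_lines) -> new summary
        self.summary = ""
        self.messages = []  # recent messages, oldest first

    def _tokens(self):
        # crude token count: whitespace-separated words
        return sum(len(m.split()) for m in self.messages)

    def save_context(self, inputs, outputs):
        self.messages.append(f"Human: {inputs['input']}")
        self.messages.append(f"AI: {outputs['output']}")
        pruned = []
        while self.messages and self._tokens() > self.max_token_limit:
            pruned.append(self.messages.pop(0))
        if pruned:
            self.summary = self.summarize(self.summary, pruned)

    def load_memory_variables(self, _inputs):
        parts = [f"System: {self.summary}"] if self.summary else []
        return {"history": "\n".join(parts + self.messages)}


memory = SimpleSummaryBufferMemory(max_token_limit=6, summarize=stub_summarize)
memory.save_context({"input": "Hello"}, {"output": "What's up"})
memory.save_context({"input": "Not much, just hanging"}, {"output": "Cool"})
print(memory.load_memory_variables({}))
```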

4. Chains

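Chains (official docs) let us combine multiple components into a single application. For example, we can create a chain that takes user input, formats it with a PromptTemplate, and passes the formatted prompt to an LLM. More complex chains can be built by combining several chains, or by combining chains with other components.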

Using LLMChain:

```python
from langchain.chat_models import ChatOpenAI
from langchain.prompts import ChatPromptTemplate
from langchain.chains import LLMChain

llm = ChatOpenAI(temperature=0.9)
prompt = ChatPromptTemplate.from_template(
    "What is the best name to describe \
a company that makes {product}?"
)
chain = LLMChain(llm=llm, prompt=prompt)

product = "Queen Size Sheet Set"
chain.run(product)

product = "Shoes of woman, should be beautiful."
chain.run(product)
```
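Stripped of the API call, an LLMChain is essentially prompt formatting followed by one model call. A minimal sketch under that assumption: TinyLLMChain and fake_llm are hypothetical, the fake model just echoes the prompt, and unlike chain.run(product) above this version takes the variable as a keyword argument.

```python
from typing import Callable


class TinyLLMChain:
    """Hypothetical stand-in for LLMChain: fill a prompt template, then call an LLM."""

    def __init__(self, llm: Callable[[str], str], template: str):
        self.llm = llm
        self.template = template

    def run(self, **variables: str) -> str:
        prompt = self.template.format(**variables)
        return self.llm(prompt)


def fake_llm(prompt: str) -> str:
    """Stand-in for ChatOpenAI: returns a canned string instead of calling an API."""
    return f"[model reply to: {prompt}]"


chain = TinyLLMChain(fake_llm, "What is the best name to describe a company that makes {product}?")
print(chain.run(product="Queen Size Sheet Set"))
```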

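SequentialChain lets you connect multiple chains and compose them into a pipeline for a specific scenario. There are two kinds of sequential chains:

- SimpleSequentialChain: the simplest form of SequentialChain; each step has a single input and a single output, and the output of one step is the input of the next.

- SequentialChain: the more general form, which allows multiple inputs and outputs.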

SimpleSequentialChain links several chains where every step has a single input and a single output: the company name that prompt1 produces from {product} becomes the {company_name} input of prompt2.

```python
from langchain.chains import SimpleSequentialChain

llm = ChatOpenAI(temperature=0.9)
product = "Shoes of woman, should be beautiful."

# Chain 1: product -> company name
prompt1 = ChatPromptTemplate.from_template(
    "What is the best name to describe a company that makes {product}?"
)
chain1 = LLMChain(llm=llm, prompt=prompt1)

# Chain 2: company name -> 20-word description
prompt2 = ChatPromptTemplate.from_template(
    "Write a 20 words description for the following company: {company_name}"
)
chain2 = LLMChain(llm=llm, prompt=prompt2)

all_chains = SimpleSequentialChain(chains=[chain1, chain2],
                                   verbose=True)
all_chains.run(product)
```
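Because every step has exactly one input and one output, the pipeline is just function composition. A hypothetical sketch, with two string-building functions standing in for chain1 and chain2:

```python
from functools import reduce


def simple_sequential(steps):
    """Chain single-input/single-output steps: each output becomes the next input."""
    def run(text):
        return reduce(lambda acc, step: step(acc), steps, text)
    return run


def name_company(product):  # hypothetical stand-in for chain1
    return f"Name({product})"


def describe_company(company_name):  # hypothetical stand-in for chain2
    return f"Description({company_name})"


pipeline = simple_sequential([name_company, describe_company])
print(pipeline("shoes"))
```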

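SequentialChain is more powerful than SimpleSequentialChain: you give each chain an output_key and specify the overall input_variables/output_variables, and, as prompt4 shows, a prompt can take several input variables.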

```python
from langchain.chains import SequentialChain

llm = ChatOpenAI(temperature=0.9)

# Chain 1: translate the review into English
prompt1 = ChatPromptTemplate.from_template(
    "Translate the following review to english:"
    "\n\n{Review}")
chain1 = LLMChain(llm=llm, prompt=prompt1,
                  output_key="English_Review")

# Chain 2: summarize the English review
prompt2 = ChatPromptTemplate.from_template(
    "Can you summarize the following review in 1 sentence:"
    "\n\n{English_Review}")
chain2 = LLMChain(llm=llm, prompt=prompt2,
                  output_key="summary")

# Chain 3: detect the language of the original review
prompt3 = ChatPromptTemplate.from_template(
    "What language is the following review:\n\n{Review}")
chain3 = LLMChain(llm=llm, prompt=prompt3,
                  output_key="language")

# Chain 4: reply to the summary in the detected language (two input variables)
prompt4 = ChatPromptTemplate.from_template(
    "Write a follow up response to the following "
    "summary in the specified language:"
    "\n\nSummary: {summary}\n\nLanguage: {language}")
chain4 = LLMChain(llm=llm, prompt=prompt4,
                  output_key="followup_message")

all_chains = SequentialChain(chains=[chain1, chain2, chain3, chain4],
                             input_variables=["Review"],
                             output_variables=["English_Review",
                                               "summary",
                                               "followup_message"],
                             verbose=True)

# df is a pandas DataFrame of product reviews (with a Review column) loaded earlier in the notebook
review = df.Review[4]
all_chains(review)
```

Output:

```text
> Entering new SequentialChain chain...

> Finished chain.
{'Review': "\xa0I loved this product. But they only seem to last a few months. The company was great replacing the first one (the frother falls out of the handle and can't be fixed). The after 4 months my second one did the same. I only use the frother for coffee once a day. It's not overuse or abuse. I'm very disappointed and will look for another. As I understand they will only replace once. Anyway, if you have one good luck.",
 'English_Review': '"I loved this product. However, it only seems to last a few months. The company was great at replacing the first one (the frother falls out of the handle and can\'t be fixed). But after four months, my second one did the same. I only use the frother for coffee once a day, so it\'s not overuse or abuse. I\'m very disappointed and will look for another one. As I understand it, they will only replace it once. Anyway, if you have one, good luck."',
 'summary': 'The reviewer enjoyed the product but found that it only lasted a few months and was disappointed that the company would only replace it once.',
 'followup_message': "Thank you for taking the time to review our product. We are glad to hear that you enjoyed it. However, we apologize for the disappointment you experienced with its lifespan. We do offer a replacement for defective products, but understand your frustration with only being able to receive it once. We value your feedback and will take it into consideration as we continue to improve our products and customer service. Please don't hesitate to reach out if there is anything else we can do to assist you."}
```
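The bookkeeping behind SequentialChain can be pictured as a shared dictionary: each chain reads its input variables from it and writes its result under its output_key, and the final result contains the inputs plus output_variables, which is why language does not appear in the output above. A hypothetical sketch, with string-building lambdas standing in for the four LLM calls:

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    """Hypothetical stand-in for an LLMChain with named inputs and one output_key."""
    input_keys: List[str]
    output_key: str
    fn: Callable[..., str]


def run_sequential(steps: List[Step], inputs: Dict[str, str],
                   output_variables: List[str]) -> Dict[str, str]:
    """Run steps in order, storing each result under its output_key in a shared dict."""
    state = dict(inputs)
    for step in steps:
        missing = [k for k in step.input_keys if k not in state]
        if missing:
            raise KeyError(f"step {step.output_key!r} is missing inputs {missing}")
        state[step.output_key] = step.fn(**{k: state[k] for k in step.input_keys})
    # return the original inputs plus the requested outputs
    return {k: state[k] for k in [*inputs, *output_variables]}


steps = [
    Step(["Review"], "English_Review", lambda Review: f"EN({Review})"),
    Step(["English_Review"], "summary", lambda English_Review: f"SUM({English_Review})"),
    Step(["Review"], "language", lambda Review: "French"),
    Step(["summary", "language"], "followup_message",
         lambda summary, language: f"REPLY[{language}]({summary})"),
]
result = run_sequential(steps, {"Review": "avis"},
                        ["English_Review", "summary", "followup_message"])
print(result)
```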

Router Chain

A router chain consists of two components:

- RouterChain: the router itself, responsible for choosing the next chain to call.

- destination_chains: the chains the RouterChain can route to.

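The main use shown here is a RouterChain inside a MultiPromptChain, forming a question-answering chain that picks the prompt most relevant to a given question and uses it to answer. First, the LLMChain example from the official docs (LLMChain documentation):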

```python
from langchain.llms import OpenAI
from langchain.prompts import PromptTemplate
from langchain.chains import ConversationChain
from langchain.chains.llm import LLMChain
from langchain.chains.router import MultiPromptChain

physics_template = """You are a very smart physics professor. \
You are great at answering questions about physics in a concise and easy to understand manner. \
When you don't know the answer to a question you admit that you don't know.

Here is a question:
{input}"""

math_template = """You are a very good mathematician. You are great at answering math questions. \
You are so good because you are able to break down hard problems into their component parts, \
answer the component parts, and then put them together to answer the broader question.

Here is a question:
{input}"""

prompt_infos = [
    {
        "name": "physics",
        "description": "Good for answering questions about physics",
        "prompt_template": physics_template,
    },
    {
        "name": "math",
        "description": "Good for answering math questions",
        "prompt_template": math_template,
    },
]

llm = OpenAI(temperature=0)

# Build one LLMChain per prompt template; these are the destination chains
destination_chains = {}
for p_info in prompt_infos:
    name = p_info["name"]
    prompt_template = p_info["prompt_template"]
    prompt = PromptTemplate(template=prompt_template, input_variables=["input"])
    chain = LLMChain(llm=llm, prompt=prompt)
    destination_chains[name] = chain

# Fallback chain for questions that match no destination
default_chain = ConversationChain(llm=llm, output_key="text")

default_chain.run("Where is the bound of universe?")
# Output:
# "That's a great question! Unfortunately, I don't know the answer. Scientists are still trying to figure out the boundaries of the universe."

default_chain.run("How to calculate the center of gravity of the triangle?")
# Output:
# 'Calculating the center of gravity of a triangle is a relatively simple process. You can use the formula x = (a+b+c)/3, where a, b, and c are the x-coordinates of the three vertices of the triangle.'

default_chain.run("How to calculate gravity?")
# Output:
# "Gravity is a force of attraction between two objects that is proportional to their masses. The gravitational force between two objects can be calculated using Newton's law of universal gravitation, which states that the force of gravity between two objects is equal to the product of their masses divided by the square of the distance between them."
```

Using an LLMRouterChain inside a MultiPromptChain, each question is automatically routed to the matching prompt template:

```python
from langchain.chains.router.llm_router import LLMRouterChain, RouterOutputParser
from langchain.chains.router.multi_prompt_prompt import MULTI_PROMPT_ROUTER_TEMPLATE

# Describe each destination as "name: description" for the router prompt
destinations = [f"{p['name']}: {p['description']}" for p in prompt_infos]
destinations_str = "\n".join(destinations)
router_template = MULTI_PROMPT_ROUTER_TEMPLATE.format(destinations=destinations_str)
router_prompt = PromptTemplate(
    template=router_template,
    input_variables=["input"],
    output_parser=RouterOutputParser(),
)
router_chain = LLMRouterChain.from_llm(llm, router_prompt)

# The router picks a destination chain; unmatched questions go to default_chain
chain = MultiPromptChain(
    router_chain=router_chain,
    destination_chains=destination_chains,
    default_chain=default_chain,
    verbose=True,
)

chain.run("How to calculate gravity?")
chain.run("How to calculate the center of gravity of the triangle?")
```
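Roughly, the router prompt asks the LLM to answer with a JSON snippet containing a destination (one of the prompt names, or DEFAULT) and next_inputs; MultiPromptChain then calls that destination chain, or default_chain for DEFAULT. A stdlib-only sketch of that dispatch step follows, with plain functions in place of the chains; route, default_answer and the sample reply are all hypothetical.

```python
import json
import re


def route(router_reply, question, destinations, default):
    """Send the question to the chain named in the router's JSON reply, else to default."""
    match = re.search(r"\{.*\}", router_reply, re.DOTALL)
    try:
        parsed = json.loads(match.group(0)) if match else {}
    except json.JSONDecodeError:
        parsed = {}
    name = parsed.get("destination", "DEFAULT")
    next_input = parsed.get("next_inputs") or question
    return destinations.get(name, default)(next_input)


def default_answer(q):  # stand-in for default_chain
    return f"default: {q}"


destinations = {
    "physics": lambda q: f"physics: {q}",  # stand-in for the physics LLMChain
    "math": lambda q: f"math: {q}",  # stand-in for the math LLMChain
}

reply = '```json\n{"destination": "math", "next_inputs": "centroid of a triangle?"}\n```'
print(route(reply, "centroid of a triangle?", destinations, default_answer))
```

Falling back to the default chain when the reply cannot be parsed keeps the sketch from failing on a malformed router answer.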
