1. Q&A over Documents

Let's start with an example. For the CSV document OutdoorClothingCatalog_1000.csv, once we build a vector index with VectorstoreIndexCreator, we can query its contents. How does this work?

```python
from langchain.chains import RetrievalQA
from langchain.chat_models import ChatOpenAI
from langchain.document_loaders import CSVLoader
from langchain.vectorstores import DocArrayInMemorySearch
from IPython.display import display, Markdown
from langchain.indexes import VectorstoreIndexCreator

# 1. Load the CSV file
file = 'OutdoorClothingCatalog_1000.csv'
loader = CSVLoader(file_path=file)

# 2. Build a vector index over the document
index = VectorstoreIndexCreator(vectorstore_cls=DocArrayInMemorySearch)\
    .from_loaders([loader])

query = "Please list all your shirts with sun protection \
in a table in markdown and summarize each one."

# 3. Query the index and render the answer as Markdown
response = index.query(query)
display(Markdown(response))
```

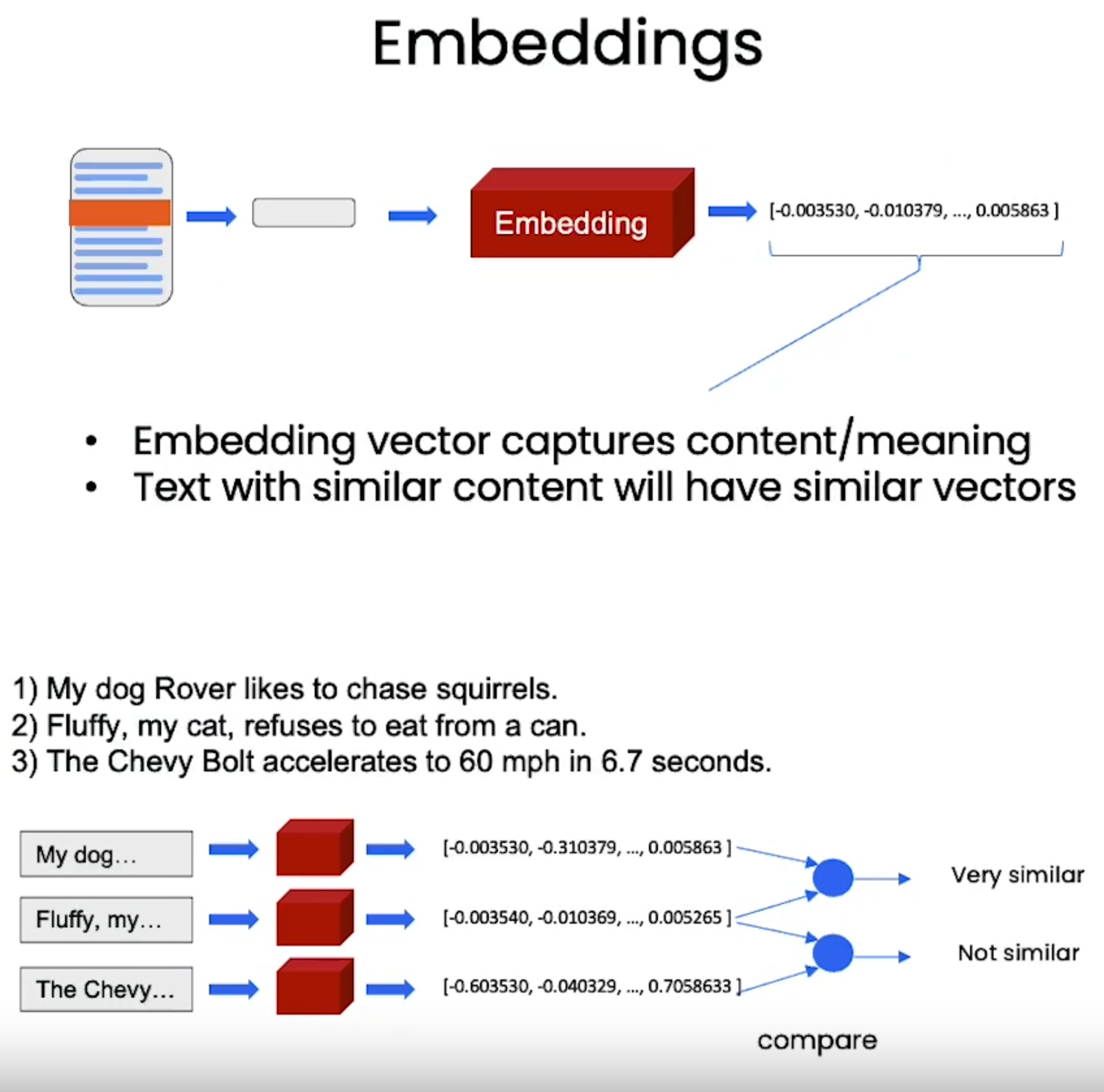

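In essence, each document is encoded by an embedding model into a vector. To answer a query, we embed the query in the same way and return the top-k document vectors that are closest to the query vector.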

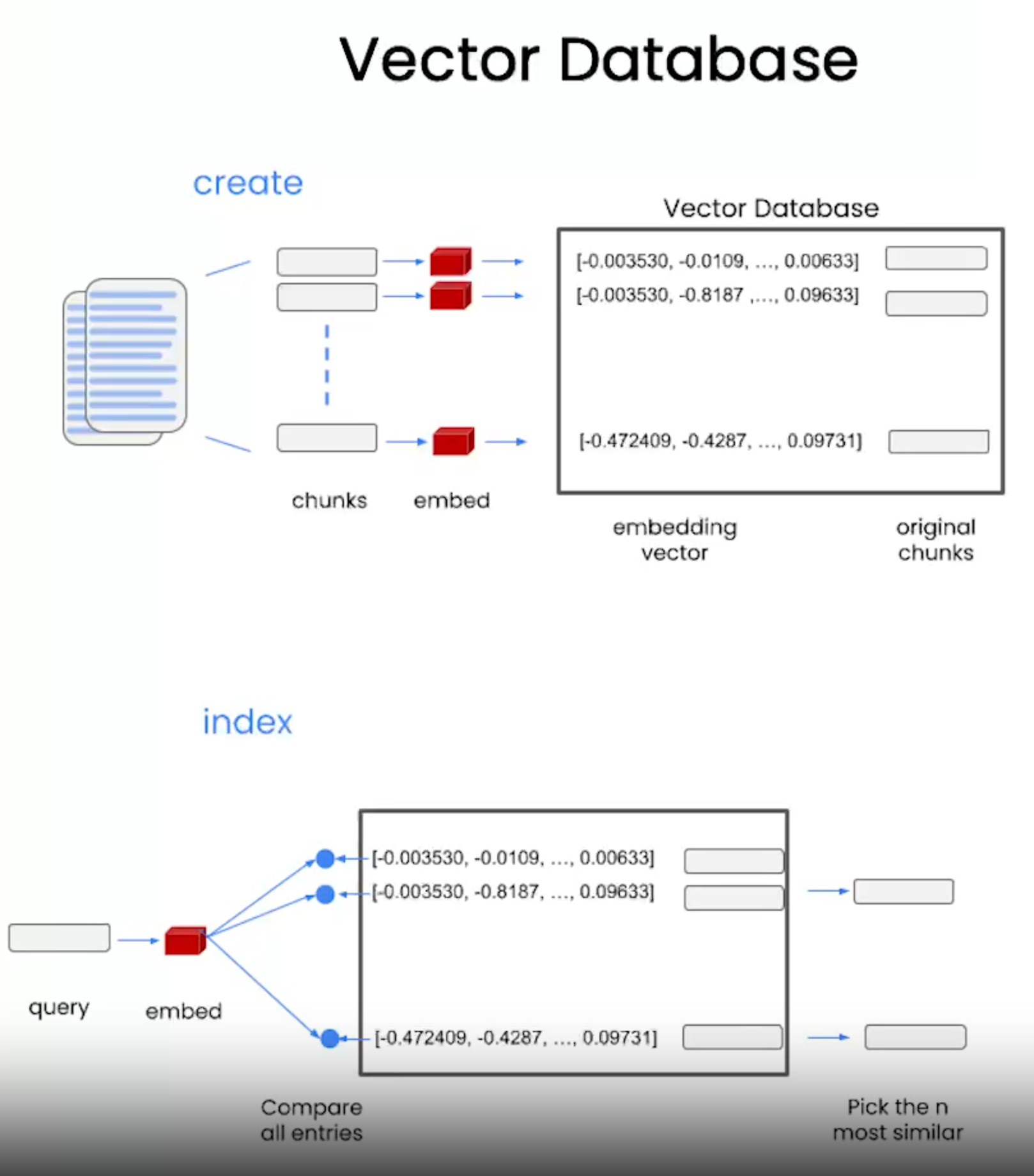
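To make the top-k lookup concrete, here is a minimal sketch in plain Python. It assumes cosine similarity as the closeness measure and uses made-up 3-dimensional vectors; the function names `cosine_similarity` and `top_k` are illustrative and are not part of LangChain.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query_vec, doc_vecs, k=2):
    """Return the indices of the k document vectors most similar to the query."""
    scored = [(cosine_similarity(query_vec, v), i) for i, v in enumerate(doc_vecs)]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [i for _, i in scored[:k]]

# Toy "embeddings" (hypothetical values; real ones have many more dimensions)
doc_vecs = [
    [1.0, 0.0, 0.0],  # doc 0
    [0.9, 0.1, 0.0],  # doc 1
    [0.0, 1.0, 0.0],  # doc 2
    [0.0, 0.0, 1.0],  # doc 3
]
query_vec = [1.0, 0.2, 0.0]

nearest = top_k(query_vec, doc_vecs, k=2)
print(nearest)  # indices of the two closest documents
```

A real vector store does the same thing conceptually, usually with an optimized or approximate nearest-neighbor search instead of a full scan.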

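In practice, documents are usually large, or there are many of them. In that case we split them into small chunks and compute an embedding vector for each chunk. We then look up the top-k vectors closest to the query vector, which identifies the most relevant chunks, and return those chunks.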
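The splitting step can be sketched as follows. This is only an illustration that assumes fixed-size character chunks with a small overlap; the helper `split_into_chunks` and its parameters are hypothetical, and LangChain's own text splitters work differently in detail.

```python
def split_into_chunks(text, chunk_size=40, overlap=10):
    """Split text into fixed-size character chunks that overlap by `overlap` characters."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current chunk reaches the end of the text
        if start + chunk_size >= len(text):
            break
    return chunks

text = "abcdefghij" * 10  # 100 characters of placeholder text
chunks = split_into_chunks(text, chunk_size=40, overlap=10)
for chunk in chunks:
    print(len(chunk), chunk)
```

The overlap means a sentence cut at a chunk boundary still appears whole in at least one chunk, which tends to help retrieval.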

Once we have a vector database, how do we use it? There are several ways to use a document vector database:

- Query the index directly:

```python
from langchain.embeddings import OpenAIEmbeddings

embeddings = OpenAIEmbeddings()

file = 'OutdoorClothingCatalog_1000.csv'
loader = CSVLoader(file_path=file)
docs = loader.load()

llm = ChatOpenAI(temperature = 0.0)

index = VectorstoreIndexCreator(vectorstore_cls=DocArrayInMemorySearch)\
    .from_loaders([loader])

response = index.query(query, llm=llm)
```

- db.similarity_search(query): search directly with similarity_search

```python
db = DocArrayInMemorySearch.from_documents(
    docs,
    embeddings
)

query = "Please suggest a shirt with sunblocking"
docs = db.similarity_search(query)
print(docs)
```

- Use db as a retriever

```python
retriever = db.as_retriever()

# Concatenate the documents returned by similarity_search into one string
qdocs = "".join(doc.page_content for doc in docs)

# Pass the retrieved text plus the question to the LLM
response = llm.call_as_llm(f"{qdocs} Question: Please list all your \
shirts with sun protection in a table in markdown and summarize each one.")
```

- RetrievalQA: use a chain to retrieve the documents and answer the question

```python
qa_stuff = RetrievalQA.from_chain_type(
    llm=llm,
    chain_type="stuff",
    retriever=retriever,
    verbose=True
)

response = qa_stuff.run(query)
```

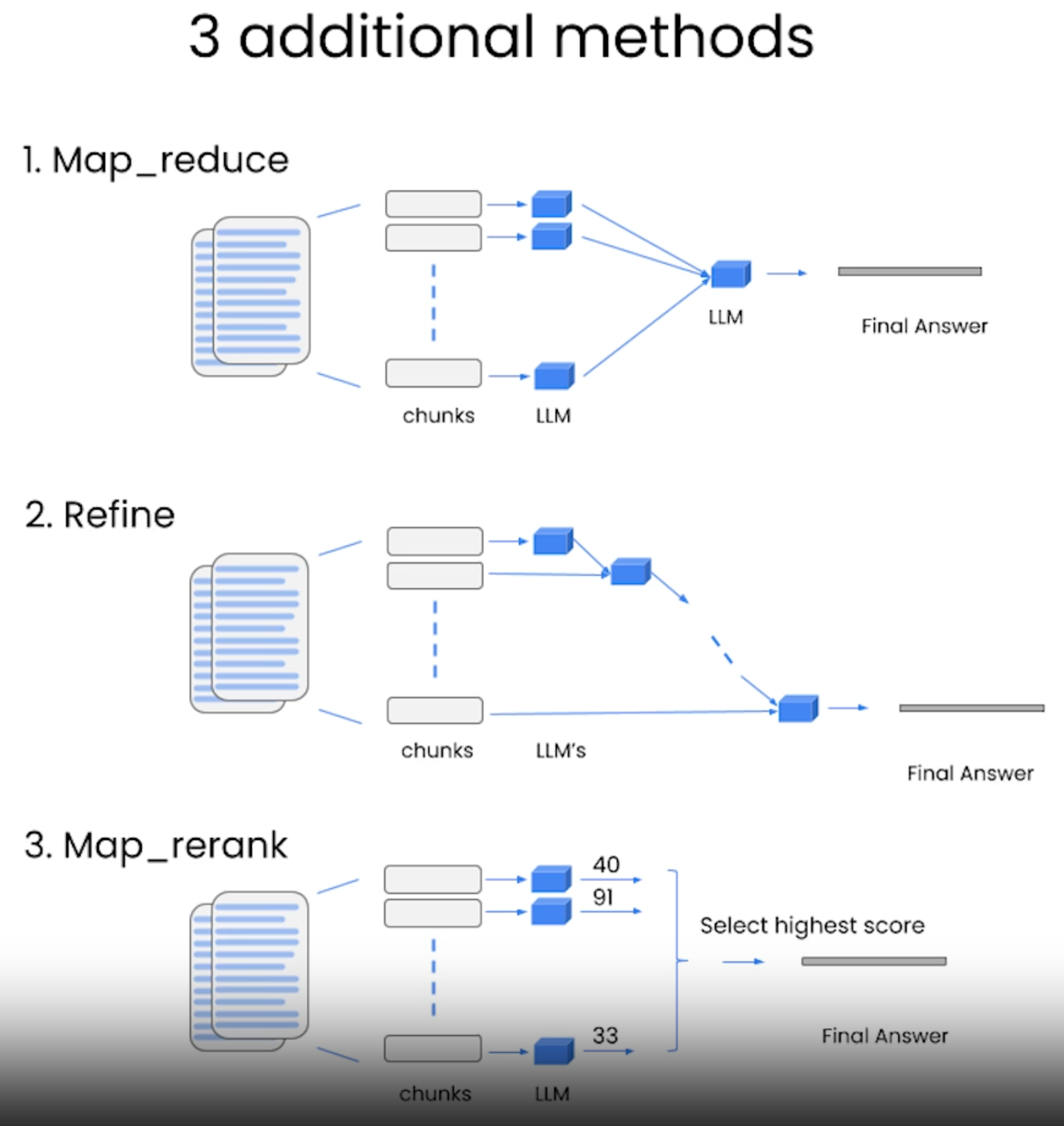
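The idea behind chain_type="stuff" is to put all retrieved chunks into a single prompt. The sketch below illustrates that idea in plain Python; the prompt wording, the helper `build_stuff_prompt`, and the sample rows are made up for illustration and are not the template or data that LangChain actually uses.

```python
def build_stuff_prompt(chunks, question):
    """Concatenate all retrieved chunks into one prompt (the "stuff" idea)."""
    context = "\n\n".join(chunks)
    return (
        "Use the following context to answer the question.\n\n"
        f"{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )

# Hypothetical retrieved chunks, standing in for rows of the CSV catalog
retrieved_chunks = [
    "name: Shirt A; description: lightweight, UPF 50+ sun protection.",
    "name: Shirt B; description: quick-drying, UPF 50+ sun protection.",
]

prompt = build_stuff_prompt(retrieved_chunks, "Which shirts offer sun protection?")
print(prompt)
```

This approach is simple and needs only one LLM call, but it only works while the combined chunks fit into the model's context window.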

There are other methods as well; see .

2. Evaluation

- langchain.debug = True: inspect whether the QA process runs correctly.

- QAEvalChain: check whether the generated answer agrees with the reference answer.

```python
from langchain.evaluation.qa import QAEvalChain

# Assumes `examples` (dicts with "query" and "answer") and `predictions`
# (the same dicts with the chain's "result" added) are already defined.
llm = ChatOpenAI(temperature=0)
eval_chain = QAEvalChain.from_llm(llm)

graded_outputs = eval_chain.evaluate(examples, predictions)

for i, eg in enumerate(examples):
    print(f"Example {i}:")
    print("Question: " + predictions[i]['query'])
    print("Real Answer: " + predictions[i]['answer'])
    print("Predicted Answer: " + predictions[i]['result'])
    print("Predicted Grade: " + graded_outputs[i]['text'])
    print()
```
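To show the record shape that the loop above reads, here is a small stand-in that needs no API key. It is not QAEvalChain: it replaces the LLM grader with a naive substring check, and the records, `normalize`, and `naive_grade` are hypothetical.

```python
def normalize(text):
    """Lowercase and collapse whitespace so trivial differences do not matter."""
    return " ".join(text.lower().split())

def naive_grade(prediction):
    """Return "CORRECT" if the reference answer appears in the predicted answer."""
    reference = normalize(prediction["answer"])
    predicted = normalize(prediction["result"])
    return "CORRECT" if reference in predicted else "INCORRECT"

# Hypothetical records: "answer" is the reference, "result" is the chain's output
predictions = [
    {"query": "Is the shirt machine washable?",
     "answer": "Yes",
     "result": "Yes, it can be machine washed."},
    {"query": "What is the UPF rating?",
     "answer": "UPF 50+",
     "result": "The rating is not stated."},
]

grades = [naive_grade(p) for p in predictions]
for p, g in zip(predictions, grades):
    print(p["query"], "->", g)
```

String matching breaks down as soon as a correct answer is phrased differently from the reference, which is the reason for grading with an LLM instead.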

3. Agents

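General steps (see the official agent documentation for details):

- Instantiate the LLM and load the tools, which determine the domain knowledge the agent can draw on

- Initialize the agent

- Call agent.run(question) to answer the question.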

```python
from langchain.agents.agent_toolkits import create_python_agent
from langchain.agents import load_tools, initialize_agent
from langchain.agents import AgentType
from langchain.tools.python.tool import PythonREPLTool
from langchain.chat_models import ChatOpenAI

# 1. Instantiate the LLM and load the tools
llm = ChatOpenAI(temperature=0)
tools = load_tools(["wikipedia", "llm-math"], llm=llm)

# 2. Initialize the agent
agent = initialize_agent(tools, llm,
                         agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
                         verbose=True)

# 3. Ask a question
agent.run("Which country is the northernmost?")
#agent.run("How to solve the center of gravity of a triangle?")
```

create_python_agent builds your own assistant, for example one that sorts customer names.

```python
agent = create_python_agent(
    llm,
    tool=PythonREPLTool(),
    verbose=True
)

customer_list = [["Harrison", "Chase"],
                 ["Lang", "Chain"],
                 ["Dolly", "Too"],
                 ["Elle", "Elem"],
                 ["Geoff","Fusion"],
                 ["Trance","Former"],
                 ["Jen","Ayai"]
                ]

agent.run(f"""Sort these customers by \
last name and then first name \
and print the output: {customer_list}""")
```
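For reference, the sorting task itself takes only a few lines of plain Python. The snippet below is a sketch of the kind of code the agent is expected to write and run in the Python REPL tool; the code the agent actually generates may differ.

```python
customer_list = [["Harrison", "Chase"],
                 ["Lang", "Chain"],
                 ["Dolly", "Too"],
                 ["Elle", "Elem"],
                 ["Geoff", "Fusion"],
                 ["Trance", "Former"],
                 ["Jen", "Ayai"]]

# Sort by last name (index 1) first, then by first name (index 0)
sorted_customers = sorted(customer_list, key=lambda name: (name[1], name[0]))

for first, last in sorted_customers:
    print(first, last)
```

Comparing the agent's printed output against this result is a quick way to check that it did the job correctly.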

The @tool decorator lets you build your own tools, for example:

```python
from langchain.agents import tool
from datetime import date

@tool
def time(text: str) -> str:
    """Returns today's date, use this for any \
    questions related to knowing today's date. \
    The input should always be an empty string, \
    and this function will always return today's \
    date - any date mathematics should occur \
    outside this function."""
    return str(date.today())

# Add the custom tool to the tools loaded earlier
agent = initialize_agent(tools + [time],
                         llm,
                         agent=AgentType.CHAT_ZERO_SHOT_REACT_DESCRIPTION,
                         handle_parsing_errors=True,
                         verbose=True)
```
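The docstring matters because the agent reads it as the tool's description when deciding which tool to call. The toy registry below sketches that idea in plain Python. It is not how LangChain implements @tool; `simple_tool`, `TOOL_REGISTRY`, and `call_tool` are hypothetical names.

```python
from datetime import date

TOOL_REGISTRY = {}

def simple_tool(func):
    """Register func under its name, using its docstring as the description."""
    TOOL_REGISTRY[func.__name__] = {
        "func": func,
        "description": (func.__doc__ or "").strip(),
    }
    return func

@simple_tool
def today(text: str) -> str:
    """Returns today's date. The input should be an empty string."""
    return str(date.today())

def call_tool(name, tool_input):
    """Look a tool up by name and call it, as an agent would after picking an action."""
    return TOOL_REGISTRY[name]["func"](tool_input)

# The descriptions are what a model would see when choosing a tool
for name, entry in TOOL_REGISTRY.items():
    print(f"{name}: {entry['description']}")

result = call_tool("today", "")
print(result)
```

A vague or misleading docstring therefore makes the agent pick the wrong tool, or pass it the wrong input, so it is worth writing carefully.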

This course mainly covers how to use LangChain, including:

- Models, Prompts and parsers

- Memory

- Chains

- QA

- Evaluation

- Agents
