1. Introduction

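Gemini is a family of large language models developed by Google DeepMind. It currently comes in three versions:

- Gemini Ultra: the most capable model. It scores 90% on MMLU (Massive Multitask Language Understanding), making it the first model to outperform human experts on that benchmark, and is aimed at highly complex tasks.

- Gemini Pro: the version currently available for free. Its capability is roughly comparable to GPT-3.5, and it handles a wide range of tasks.

- Gemini Nano: a lightweight version that runs on devices such as the Pixel 8 Pro.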

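For more details, see the official website. Here we use the Gemini Pro model to build a question-answering app over multiple documents. This requires the Gemini API, so you first need to apply for an API key.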

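As in the previous post, the pipeline is: parse the PDFs, split the text into chunks, embed the chunks, retrieve the top-k most similar chunks, and let the LLM generate an answer from the retrieved context and the question.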

Because Gemini performs very poorly on Chinese, avoid Chinese PDFs where possible. The Llama 2 paper is used for testing here.
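The retrieval pipeline described above (chunk, embed, top-k search, stuff the hits into one prompt) can be illustrated with a toy, standard-library-only sketch. It is not the app's implementation: the app uses LangChain's RecursiveCharacterTextSplitter (which prefers to split on paragraph, line and word boundaries), Gemini embeddings and FAISS. Here fixed-size character windows, a bag-of-words vector and brute-force cosine similarity stand in for them, and all function names are hypothetical.

```python
import math
import re
from collections import Counter


def chunk_text(text, chunk_size, chunk_overlap):
    """Fixed-size character windows; consecutive chunks share chunk_overlap characters."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    if not text:
        return []
    step = chunk_size - chunk_overlap
    # Stop once a window would contain nothing beyond the previous overlap
    return [text[i:i + chunk_size] for i in range(0, max(len(text) - chunk_overlap, 1), step)]


def embed(text):
    """Toy embedding: bag-of-words term counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k(question, chunks, k=2):
    """Brute-force search: score every chunk against the question, keep the best k."""
    q = embed(question)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(question, docs):
    """'Stuff' strategy: paste all retrieved chunks into a single prompt."""
    context = "\n\n".join(docs)
    return f"Context:\n{context}\n\nQuestion:\n{question}\n\nAnswer:"


if __name__ == "__main__":
    docs = [
        "Llama 2 was trained with the AdamW optimizer.",
        "The pretraining data is mostly English.",
        "Grouped-query attention reduces memory use.",
    ]
    question = "Which optimizer was used?"
    print(build_prompt(question, top_k(question, docs, k=1)))
```

FAISS plays the role of `top_k` in the real app, but indexes the vectors so that search does not have to rescore every chunk for each question.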

2. Code

import glob
import os

import streamlit as st
from PyPDF2 import PdfReader
from langchain.prompts import PromptTemplate
from langchain.chains.question_answering import load_qa_chain
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain_community.vectorstores import FAISS
import google.generativeai as genai
from langchain_google_genai import (GoogleGenerativeAIEmbeddings,
                                    ChatGoogleGenerativeAI)

# Replace "xxxx" with your own Gemini API key
os.environ["GOOGLE_API_KEY"] = "xxxx"
# genai.configure(api_key=os.getenv("GOOGLE_API_KEY"))

# Optional: route requests through a local proxy (uncomment if needed)
# os.environ['HTTP_PROXY'] = 'http://127.0.0.1:1081'
# os.environ['HTTPS_PROXY'] = 'http://127.0.0.1:1081'


def get_pdf_text(pdf_docs):
    """Concatenate the text of every page of every uploaded PDF."""
    text = ""
    for pdf in pdf_docs:
        pdf_reader = PdfReader(pdf)
        for page in pdf_reader.pages:
            # Pages without extractable text (e.g. scanned images) contribute nothing
            text += page.extract_text() or ""
    return text


def get_text_chunks(text, chunk_size=1024, chunk_overlap=100):
    """Split the text into overlapping chunks; sizes are measured in characters."""
    text_splitter = RecursiveCharacterTextSplitter(chunk_size=chunk_size,
                                                   chunk_overlap=chunk_overlap)
    return text_splitter.split_text(text)


def build_vector_store(chunks):
    """Embed every chunk once with the Gemini embedding model and index it in FAISS."""
    embeddings = GoogleGenerativeAIEmbeddings(model="models/embedding-001")
    return FAISS.from_texts(chunks, embedding=embeddings)


def user_input(user_question, vector_store):
    # Retrieve the 2 chunks most similar to the question
    docs = vector_store.similarity_search(user_question, k=2)
    # print("Relevant documents:", docs)

    prompt_template = """
Answer the question as detailed as possible from the provided context, make sure to provide all the details, if the answer is not in
provided context just say, "answer is not available in the context", don't provide the wrong answer

Context:
{context}

Question:
{question}

Answer:
"""
    model = ChatGoogleGenerativeAI(model="gemini-pro", temperature=0.3)
    prompt = PromptTemplate(template=prompt_template,
                            input_variables=["context", "question"])
    # "stuff" chain: all retrieved chunks are inserted into {context} of one prompt
    chain = load_qa_chain(llm=model, chain_type="stuff", prompt=prompt)
    response = chain.run(input_documents=docs, question=user_question)
    print(response)
    return response


def find_pdf_files(directory):
    """Return the paths of all PDF files in a directory."""
    return glob.glob(os.path.join(directory, '*.pdf'))


def main():
    st.set_page_config("Document Q&A")
    st.header("Knowledge Q&A based on Gemini")

    if "vector_store" not in st.session_state:
        st.session_state.vector_store = None  # built once, when PDFs are uploaded

    with st.sidebar:
        st.title("Contents:")
        pdf_docs = st.file_uploader("Upload PDF documents", accept_multiple_files=True)
        if st.button("Upload"):
            if not pdf_docs:
                st.warning("Please select at least one PDF first.")
            else:
                with st.spinner("Processing..."):
                    texts = get_pdf_text(pdf_docs)
                    chunks = get_text_chunks(texts, 4000, 500)
                    st.session_state.vector_store = build_vector_store(chunks)
                    st.write("Embeddings created!")

    user_question = st.text_input("Enter a question about the documents and press Enter:")
    if user_question.lower() == "qq":
        st.write("Exited.")
    elif user_question and st.session_state.vector_store is not None:
        with st.spinner("Processing..."):
            response = user_input(user_question, st.session_state.vector_store)
            st.write(response)


if __name__ == '__main__':
    main()

# Run with: streamlit run app.py --server.port=60149

3. Testing with questions about Llama 2

Here are 10 questions, all answerable from the paper, used for testing:

| # | Question |
|---|---|
| 1 | What kind of optimizer was used in the training of Llama 2? |
| 2 | What are the hyperparameters used for the AdamW optimizer in the training of Llama 2? |
| 3 | What is the learning rate schedule applied in the training of the Llama 2 model? |
| 4 | Could you provide details about the training data parameters for Llama 2 and Llama 1? |
| 5 | What does the token count refer to in the training of the Llama 2 family of models? |
| 6 | What is the role of the Grouped-Query Attention (GQA) in the training of the Llama 2 model? |
| 7 | What percentage of the pretraining data for Llama 2 is in English? |
| 8 | What other languages are part of the pretraining data for Llama 2 and what percentage do they represent? |
| 9 | What contributes to the large unknown category in the language distribution of the pretraining data for Llama 2? |
| 10 | How might the language distribution of the pretraining data affect the performance of Llama 2? |

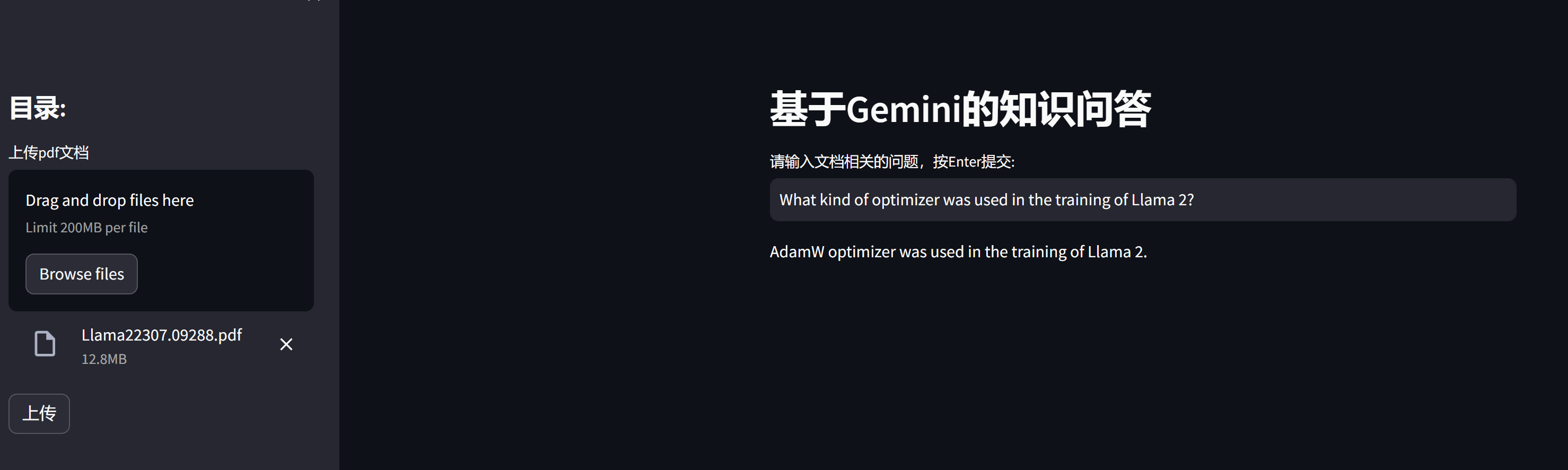

Result for the first question (remember to click the upload button first):

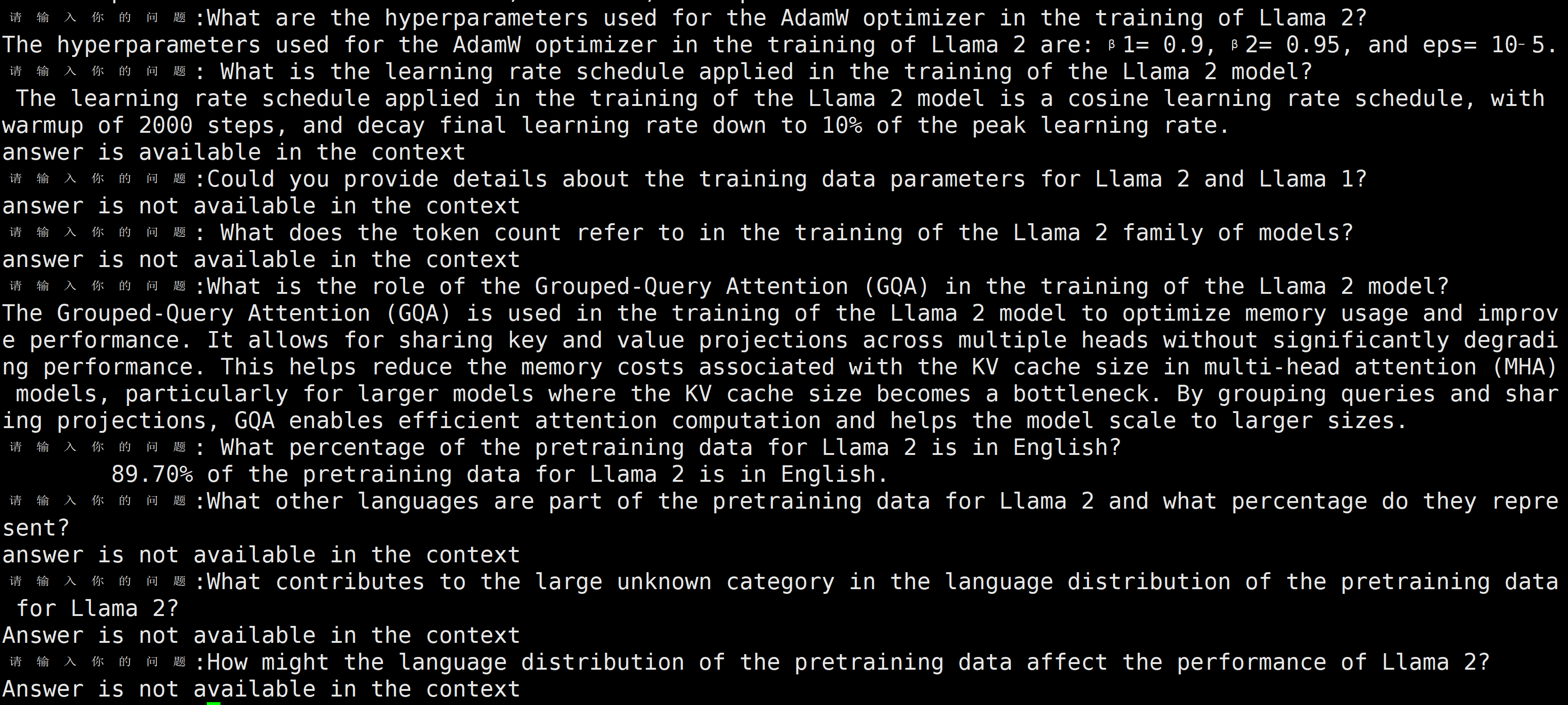

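Running all 10 questions through a script gives the results below. Only 5 were answered; every other answer was "answer is not available in the context". That is quite poor: when I ran the same test locally with Llama 2, it answered more than 50%, and Gemini also frequently refused to answer from the chunks that had been retrieved. Chinese is not worth trying at all; for most use cases, stick to English.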

| # | Question | Gemini Pro answer |
|---|---|---|
| 1 | What kind of optimizer was used in the training of Llama 2? | AdamW optimizer was used in the training of Llama 2. |
| 2 | What are the hyperparameters used for the AdamW optimizer in the training of Llama 2? | The hyperparameters used for the AdamW optimizer in the training of Llama 2 are: β1= 0.9, β2= 0.95, and eps= 10^-5. |
| 3 | What is the learning rate schedule applied in the training of the Llama 2 model? | The learning rate schedule applied in the training of the Llama 2 model is a cosine learning rate schedule, with warmup of 2000 steps, and decay final learning rate down to 10% of the peak learning rate. |
| 4 | Could you provide details about the training data parameters for Llama 2 and Llama 1? | answer is not available in the context |
| 5 | What does the token count refer to in the training of the Llama 2 family of models? | answer is not available in the context |
| 6 | What is the role of the Grouped-Query Attention (GQA) in the training of the Llama 2 model? | The Grouped-Query Attention (GQA) is used in the training of the Llama 2 model to optimize memory usage and improve performance… |
| 7 | What percentage of the pretraining data for Llama 2 is in English? | 89.70% of the pretraining data for Llama 2 is in English. |
| 8 | What other languages are part of the pretraining data for Llama 2 and what percentage do they represent? | answer is not available in the context |
| 9 | What contributes to the large unknown category in the language distribution of the pretraining data for Llama 2? | Answer is not available in the context |
| 10 | How might the language distribution of the pretraining data affect the performance of Llama 2? | Answer is not available in the context |
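The script used to run the 10 questions is not shown in the post. A minimal sketch of such a batch run might look like this, assuming `answer_fn` is a hypothetical callable that wraps the app's question-answering code and returns the answer as a string. An answer counts as a refusal if it contains the fallback phrase from the prompt, matched case-insensitively because the model sometimes capitalizes it (rows 9 and 10).

```python
REFUSAL = "answer is not available in the context"


def run_batch(questions, answer_fn):
    """Ask every question; return the (question, answer) pairs and how many were answered."""
    results = []
    answered = 0
    for q in questions:
        answer = answer_fn(q)
        results.append((q, answer))
        # Case-insensitive match: the model sometimes capitalizes the fallback phrase
        if REFUSAL not in answer.lower():
            answered += 1
    return results, answered


def to_markdown(results):
    """Format the results as a numbered Markdown table."""
    lines = ["| # | Question | Answer |", "|---|---|---|"]
    for i, (q, a) in enumerate(results, start=1):
        lines.append(f"| {i} | {q} | {a} |")
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical canned answers standing in for real model calls
    canned = {
        "What kind of optimizer was used in the training of Llama 2?":
            "AdamW optimizer was used in the training of Llama 2.",
        "What contributes to the large unknown category in the language distribution "
        "of the pretraining data for Llama 2?":
            "Answer is not available in the context",
    }
    results, answered = run_batch(list(canned), canned.get)
    print(to_markdown(results))
    print(f"answered {answered}/{len(results)}")
```

Counting refusals by string matching only works because the prompt asks for that exact phrase; answers that refuse in other words would be counted as answered.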

Environment setup:
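No package versions are given. The packages the app needs can be inferred from its imports; the pip-name-to-module mapping below is an assumption based on the packages' usual names (faiss-cpu is the CPU build that provides `faiss`, which LangChain's FAISS wrapper requires). A small helper to report which ones are missing:

```python
import importlib.util

# Inferred from the imports in app.py: pip package name -> importable module name
REQUIRED = {
    "streamlit": "streamlit",
    "PyPDF2": "PyPDF2",
    "langchain": "langchain",
    "langchain-community": "langchain_community",
    "langchain-google-genai": "langchain_google_genai",
    "google-generativeai": "google.generativeai",
    "faiss-cpu": "faiss",
}


def is_installed(module_name):
    """True if the module can be found without fully importing it."""
    try:
        return importlib.util.find_spec(module_name) is not None
    except (ImportError, ValueError):
        # find_spec imports the parent of a dotted name; a missing parent raises
        return False


def missing_packages(required=REQUIRED):
    """Return the pip names of packages whose module cannot be found."""
    return [pip_name for pip_name, module in required.items() if not is_installed(module)]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("pip install " + " ".join(missing))
    else:
        print("All required packages found.")
```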
