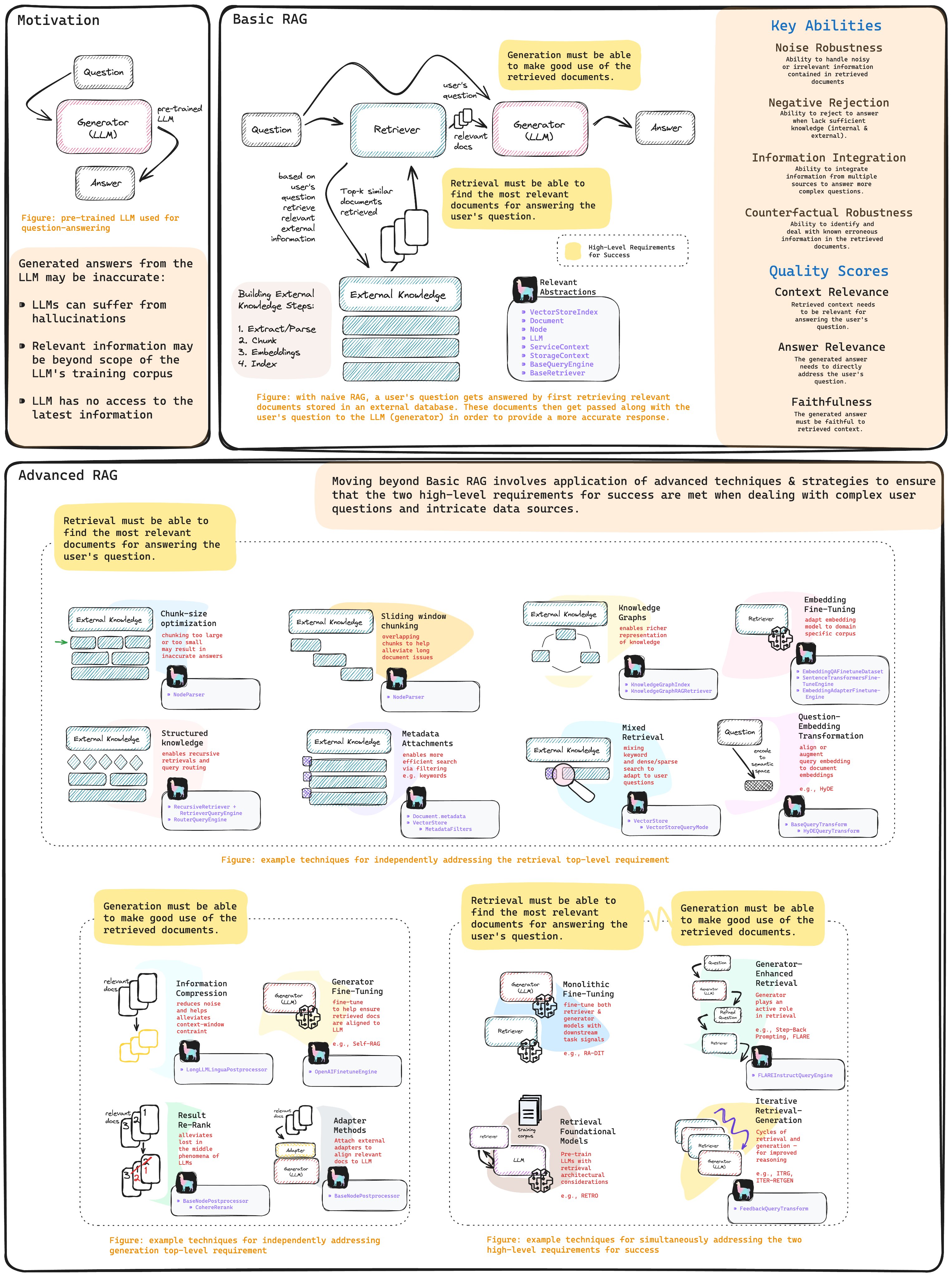

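Probably the most popular RAG piece recently is A Cheat Sheet and Some Recipes For Building Advanced RAG. All of its key RAG points are summarized in its figure, reproduced at the top of this post.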

The figure alone shows how much value it packs. Let's start with the first part: Chunk-size optimization under Advanced RAG in the figure.

Official llama index example: param_optimizer.ipynb.

My own experiment (not run to completion because of API limits): 1.param_optimizer.ipynb.

1. How node information is added

- Parse the documents to get base_nodes

- Build an index over base_nodes with VectorStoreIndex

- Persist the index locally

```python
import os
from pathlib import Path

# pre-0.10 llama_index import layout, matching the SimpleNodeParser import
from llama_index import StorageContext, VectorStoreIndex, load_index_from_storage
from llama_index.node_parser import SimpleNodeParser


def _build_index(chunk_size, docs):
    """Build a vector index for chunk_size, or load it if already persisted."""
    index_out_path = f"./storage_{chunk_size}"
    if not os.path.exists(index_out_path):
        Path(index_out_path).mkdir(parents=True, exist_ok=True)
        # parse docs into base_nodes
        node_parser = SimpleNodeParser.from_defaults(chunk_size=chunk_size)
        base_nodes = node_parser.get_nodes_from_documents(docs)

        # build index over base_nodes
        index = VectorStoreIndex(base_nodes)

        # save index to disk
        index.storage_context.persist(index_out_path)
    else:
        # rebuild storage context
        storage_context = StorageContext.from_defaults(persist_dir=index_out_path)
        # load index
        index = load_index_from_storage(storage_context)
    return index
```
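To get a feel for what chunk_size controls, here is a minimal sketch of fixed-size chunking. It is a simplification and an assumption: it splits on whitespace-separated words, whereas SimpleNodeParser counts tokens and tries to respect sentence boundaries. chunk_words and its overlap parameter are hypothetical names for illustration only.

```python
from typing import List


def chunk_words(text: str, chunk_size: int, overlap: int = 0) -> List[str]:
    """Split text into chunks of at most chunk_size words (hypothetical helper).

    Consecutive chunks share `overlap` words. Word-based rather than
    token-based, so it only approximates a real node parser.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # stop once this window already reaches the end of the text
        if start + chunk_size >= len(words):
            break
    return chunks


if __name__ == "__main__":
    doc = " ".join(f"w{i}" for i in range(1000))
    for size in (256, 512, 1024):
        print(size, len(chunk_words(doc, size)))
```

For a 1000-word document this gives 4, 2 and 1 chunks for sizes 256, 512 and 1024: smaller chunks mean more, finer-grained nodes, which is the trade-off the tuning in section 2 searches over.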

The key method is get_nodes_from_documents, which parses the documents into nodes and sets up the relationships between them:

```python
def get_nodes_from_documents(
    self,
    documents: Sequence[Document],
    show_progress: bool = False,
    **kwargs: Any,
) -> List[BaseNode]:
    """Parse documents into nodes.

    Args:
        documents (Sequence[Document]): documents to parse
        show_progress (bool): whether to show progress bar

    """
    doc_id_to_document = {doc.id_: doc for doc in documents}

    with self.callback_manager.event(
        CBEventType.NODE_PARSING, payload={EventPayload.DOCUMENTS: documents}
    ) as event:
        nodes = self._parse_nodes(documents, show_progress=show_progress, **kwargs)

        for i, node in enumerate(nodes):
            if (
                node.ref_doc_id is not None
                and node.ref_doc_id in doc_id_to_document
            ):
                ref_doc = doc_id_to_document[node.ref_doc_id]
                start_char_idx = ref_doc.text.find(
                    node.get_content(metadata_mode=MetadataMode.NONE)
                )

                # update start/end char idx
                if start_char_idx >= 0:
                    node.start_char_idx = start_char_idx
                    node.end_char_idx = start_char_idx + len(
                        node.get_content(metadata_mode=MetadataMode.NONE)
                    )

                # update metadata
                if self.include_metadata:
                    node.metadata.update(
                        doc_id_to_document[node.ref_doc_id].metadata
                    )

            if self.include_prev_next_rel:
                if i > 0:
                    node.relationships[NodeRelationship.PREVIOUS] = nodes[
                        i - 1
                    ].as_related_node_info()
                if i < len(nodes) - 1:
                    node.relationships[NodeRelationship.NEXT] = nodes[
                        i + 1
                    ].as_related_node_info()

        event.on_end({EventPayload.NODES: nodes})

    return nodes
```
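The post-processing in get_nodes_from_documents boils down to two steps: locate each chunk in its source document with str.find to record start/end character offsets (and copy the document metadata), then link each node to its neighbours in the parsed list. Below is a dependency-free sketch of that logic. It assumes a hypothetical ToyNode dataclass in place of llama_index's node classes and plain list indices in place of as_related_node_info(); it is an illustration, not the library's code.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ToyNode:
    """Hypothetical stand-in for a llama_index node."""
    text: str
    ref_doc_id: str
    start_char_idx: Optional[int] = None
    end_char_idx: Optional[int] = None
    metadata: Dict[str, str] = field(default_factory=dict)
    prev_idx: Optional[int] = None  # plays the role of NodeRelationship.PREVIOUS
    next_idx: Optional[int] = None  # plays the role of NodeRelationship.NEXT


def link_nodes(
    nodes: List[ToyNode],
    docs: Dict[str, str],
    doc_metadata: Dict[str, Dict[str, str]],
) -> List[ToyNode]:
    for i, node in enumerate(nodes):
        if node.ref_doc_id in docs:
            # locate the chunk inside its source document (first match only)
            start = docs[node.ref_doc_id].find(node.text)
            if start >= 0:
                node.start_char_idx = start
                node.end_char_idx = start + len(node.text)
            # copy document-level metadata (the library gates this on include_metadata)
            node.metadata.update(doc_metadata.get(node.ref_doc_id, {}))
        # link neighbours by position (the library gates this on include_prev_next_rel)
        if i > 0:
            node.prev_idx = i - 1
        if i < len(nodes) - 1:
            node.next_idx = i + 1
    return nodes
```

As in the library code shown above, str.find returns the first match, so a chunk whose text repeats in the document gets the offsets of its first occurrence, and the prev/next links follow the flat node list, so the last node of one document is linked to the first node of the next.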

2. Chunk size parameter optimization

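First define the objective function to optimize. Taking the async version, aobjective_function, as the example, the main flow is:

- Read the parameters

- Build the index

- Query

- Collect the responses

- Evaluate: the metric is semantic similarity, computed between the generated responses and the reference (ground-truth) answers.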

```python
import numpy as np

# pre-0.10 llama_index import paths (may differ in other versions)
from llama_index.evaluation.eval_utils import aget_responses
from llama_index.param_tuner.base import RunResult

# _build_index is defined in section 1. _get_eval_batch_runner is a helper
# defined elsewhere in the notebook (not shown); judging by its use, it returns
# a batch evaluation runner whose results include a "semantic_similarity" key.


async def aobjective_function(params_dict):
    chunk_size = params_dict["chunk_size"]
    docs = params_dict["docs"]
    top_k = params_dict["top_k"]
    eval_qs = params_dict["eval_qs"]
    ref_response_strs = params_dict["ref_response_strs"]

    # build index
    index = _build_index(chunk_size, docs)

    # query engine
    query_engine = index.as_query_engine(similarity_top_k=top_k)

    # get predicted responses
    pred_response_objs = await aget_responses(
        eval_qs, query_engine, show_progress=True
    )

    # run evaluator
    # NOTE: can uncomment other evaluators
    eval_batch_runner = _get_eval_batch_runner()
    eval_results = await eval_batch_runner.aevaluate_responses(
        eval_qs, responses=pred_response_objs, reference=ref_response_strs
    )

    # get semantic similarity metric (mean over all eval questions)
    mean_score = np.array(
        [r.score for r in eval_results["semantic_similarity"]]
    ).mean()

    return RunResult(score=mean_score, params=params_dict)
```
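The score aobjective_function returns is the mean of per-question semantic-similarity scores. Here is a minimal numpy sketch of that reduction, assuming each score is the cosine similarity between the embedding of the generated answer and that of the reference answer (my understanding of the default in llama_index's semantic similarity evaluator; check your version). The vectors are made-up toy embeddings, and cosine_similarity and mean_semantic_similarity are hypothetical helpers.

```python
import numpy as np


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine of the angle between two embedding vectors."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


def mean_semantic_similarity(pred_embs, ref_embs) -> float:
    """Average cosine similarity over (prediction, reference) pairs."""
    if len(pred_embs) != len(ref_embs):
        raise ValueError("need one reference per prediction")
    scores = [cosine_similarity(p, r) for p, r in zip(pred_embs, ref_embs)]
    # same reduction as np.array([...]).mean() in aobjective_function
    return float(np.array(scores).mean())


if __name__ == "__main__":
    # toy 3-d "embeddings"; real ones come from an embedding model
    preds = [np.array([1.0, 0.0, 0.0]), np.array([1.0, 1.0, 0.0])]
    refs = [np.array([1.0, 0.0, 0.0]), np.array([0.0, 1.0, 0.0])]
    print(mean_semantic_similarity(preds, refs))
```

The first pair is identical (score 1.0) and the second is 45 degrees apart (score about 0.707), so the mean is about 0.854. A single mean hides per-question variance, which is worth inspecting when the eval set is only 10 questions.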

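The rest works like hyperparameter tuning in AutoML:

- Choose the parameter values to search over, here {"chunk_size": [256, 512, 1024], "top_k": [1, 2, 5]}.

- Use ParamTuner to run the objective function on every parameter combination and obtain the best score and the corresponding parameter values. (The code uses the synchronous ParamTuner, so it passes objective_function, the sync counterpart of aobjective_function.)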

This finally yields: Score: 0.9521222054806685, Top-k: 2, Chunk size: 512.

```python
from llama_index.param_tuner import ParamTuner

# search space: every combination is tried (3 x 3 = 9 runs)
param_dict = {"chunk_size": [256, 512, 1024], "top_k": [1, 2, 5]}
# param_dict = {
#     "chunk_size": [256],
#     "top_k": [1],
# }

# passed unchanged to every run; docs, eval_qs and ref_response_strs are
# prepared earlier in the notebook (only the first 10 questions are used)
fixed_param_dict = {
    "docs": docs,
    "eval_qs": eval_qs[:10],
    "ref_response_strs": ref_response_strs[:10],
}

# objective_function: sync counterpart of aobjective_function (not shown)
param_tuner = ParamTuner(
    param_fn=objective_function,
    param_dict=param_dict,
    fixed_param_dict=fixed_param_dict,
    show_progress=True,
)
results = param_tuner.tune()
```
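ParamTuner's grid search can be pictured as: enumerate every combination in param_dict, merge in fixed_param_dict, call the objective on each, and keep the highest score. A minimal plain-Python sketch under that assumption follows; it is not the library's code, which also handles progress reporting and returns richer result objects. grid_search and toy_objective are hypothetical, and the toy score is invented purely to make the example runnable.

```python
import itertools
from typing import Any, Callable, Dict, List, Tuple


def grid_search(
    param_fn: Callable[[Dict[str, Any]], float],
    param_dict: Dict[str, List[Any]],
    fixed_param_dict: Dict[str, Any],
) -> Tuple[float, Dict[str, Any]]:
    """Evaluate param_fn on every combination; return (best_score, best_params)."""
    keys = list(param_dict)
    best_score, best_params = float("-inf"), {}
    for values in itertools.product(*(param_dict[k] for k in keys)):
        params = dict(zip(keys, values))
        # the objective sees both the fixed and the tuned parameters
        score = param_fn({**fixed_param_dict, **params})
        if score > best_score:
            best_score, best_params = score, params
    return best_score, best_params


def toy_objective(p: Dict[str, Any]) -> float:
    # invented score that peaks at chunk_size=512, top_k=2
    return -abs(p["chunk_size"] - 512) / 512 - abs(p["top_k"] - 2)


if __name__ == "__main__":
    grid = {"chunk_size": [256, 512, 1024], "top_k": [1, 2, 5]}
    print(grid_search(toy_objective, grid, {"docs": []}))
```

With a real objective, each of the 9 calls builds (or loads) an index, queries the LLM for every eval question and scores the answers, so the size of the grid directly drives the cost.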

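In my view, the hard parts in real-world use are:

- A gold dataset is hard to build: if it covers too little it is of little use, and if it covers too much it costs too much.

- Parameter search needs a lot of compute and takes a lot of time. Without a budget, don't bother.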
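To make the cost concrete, here is a small count for the grid and the 10 evaluation questions used above. It assumes each run sends every evaluation question through the query engine once and scores each answer once, and that indexes are reused per chunk_size because _build_index caches them under ./storage_{chunk_size} (each first build still embeds all the documents). The actual number of API calls per query depends on the configuration. tuning_cost is a hypothetical helper.

```python
from math import prod
from typing import Dict, List


def tuning_cost(param_dict: Dict[str, List[int]], num_questions: int) -> Dict[str, int]:
    """Rough call counts for an exhaustive grid search (hypothetical helper)."""
    runs = prod(len(values) for values in param_dict.values())
    return {
        "runs": runs,                         # one objective call per combination
        "queries": runs * num_questions,      # query-engine calls, each hitting the LLM
        "evaluations": runs * num_questions,  # one similarity score per answer
        "indexes": len(set(param_dict["chunk_size"])),  # built once per chunk size, then cached
    }


if __name__ == "__main__":
    print(tuning_cost({"chunk_size": [256, 512, 1024], "top_k": [1, 2, 5]}, 10))
```

This gives 9 runs, 90 queries, 90 evaluations and 3 index builds. One more value per parameter (4 x 4) already means 16 runs and 160 queries, and using the full eval set instead of eval_qs[:10] multiplies that again.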

End of post

2024-01-10