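Deploying Qwen and Qwen-VL as web demos

1. Setting up the environment

Project repository:

I do not clone the whole repository here. Only the corresponding web_demo.py is used for deployment, so the main work is installing its dependencies: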

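- Install all the packages the environment needs.

- Install flash-attention.

- Install layer_norm to accelerate the corresponding layers. Remember to set MAX_JOBS to the number of available CPU cores to speed up compilation, otherwise the build takes a very long time.

- Note: use gradio<3.42, otherwise the returned response is not handled correctly.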

```shell
# 1. Install all required packages with pip
#    (mdtex2html is added here because qwen_web_demo.py imports it)
pip install transformers==4.38.0 tiktoken accelerate deepspeed peft tokenizers optimum auto-gptq einops transformers_stream_generator==0.0.4 scipy gradio==3.41 mpi4py mdtex2html

# 2. flash-attention
git clone https://github.com/Dao-AILab/flash-attention
cd flash-attention && pip install .

# 3. layer_norm (run from inside the flash-attention checkout entered in step 2)
cd csrc/layer_norm && MAX_JOBS=32 pip install .
```
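Before launching the demo, it can help to confirm that the installed packages match the constraints above. The following is a minimal sketch, not part of the original setup: the helper names (`version_tuple`, `gradio_is_compatible`, `report_environment`) are made up for illustration, and `flash_attn` and `mdtex2html` are assumed to be the import names of the corresponding packages.

```python
import re
from importlib import metadata, util


def version_tuple(version: str) -> tuple:
    """Turn '3.41.2' into (3, 41, 2); parsing stops at the first non-numeric piece."""
    parts = []
    for piece in version.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def gradio_is_compatible(version: str) -> bool:
    """Check the gradio<3.42 constraint mentioned in the install notes."""
    return version_tuple(version) < (3, 42)


def report_environment() -> dict:
    """Collect install facts without importing any heavy module."""
    try:
        gradio_version = metadata.version("gradio")
    except metadata.PackageNotFoundError:
        gradio_version = None
    return {
        "gradio_version": gradio_version,
        "gradio_ok": gradio_version is not None and gradio_is_compatible(gradio_version),
        # Assumed import names; adjust if your environment differs.
        "flash_attn_installed": util.find_spec("flash_attn") is not None,
        "mdtex2html_installed": util.find_spec("mdtex2html") is not None,
    }


if __name__ == "__main__":
    for key, value in report_environment().items():
        print(f"{key}: {value}")
```

If `gradio_ok` comes back `False`, reinstalling with the pinned `gradio==3.41` from the pip command is the simplest fix.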

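2. Qwen-7B results

Start the demo with:

python3 qwen_web_demo.py -c ./models/Qwen/Qwen-7B-Chat

The page is then served on the configured port (6006 by default in the script). The screenshot after the script shows the result.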
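If the page does not open, a quick way to tell whether the server is up at all is to try a plain TCP connection to it. This is a small sketch under the assumption that the demo runs with the script's default `--server-name` (127.0.0.1) and `--server-port` (6006); `is_listening` is a hypothetical helper, not something the demo provides.

```python
import socket


def is_listening(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


if __name__ == "__main__":
    # Defaults taken from the argument parser in qwen_web_demo.py.
    print("demo reachable:", is_listening("127.0.0.1", 6006))
```

Because the default server name is 127.0.0.1, the page is only reachable from the same machine. To open it from another host, the script's own flags can be used, for example `--server-name 0.0.0.0 --server-port 6006`.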

qwen_web_demo.py, kept here for reference:

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
from argparse import ArgumentParser

import gradio as gr
import mdtex2html
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer
from transformers.generation import GenerationConfig

DEFAULT_CKPT_PATH = 'Qwen/Qwen-7B-Chat'


def _get_args():
    parser = ArgumentParser()
    parser.add_argument("-c", "--checkpoint-path", type=str, default=DEFAULT_CKPT_PATH,
                        help="Checkpoint name or path, default to %(default)r")
    parser.add_argument("--cpu-only", action="store_true", help="Run demo with CPU only")
    parser.add_argument("--share", action="store_true", default=False,
                        help="Create a publicly shareable link for the interface.")
    parser.add_argument("--inbrowser", action="store_true", default=False,
                        help="Automatically launch the interface in a new tab on the default browser.")
    parser.add_argument("--server-port", type=int, default=6006,
                        help="Demo server port.")
    parser.add_argument("--server-name", type=str, default="127.0.0.1",
                        help="Demo server name.")
    args = parser.parse_args()
    return args


def _load_model_tokenizer(args):
    tokenizer = AutoTokenizer.from_pretrained(
        args.checkpoint_path, trust_remote_code=True, resume_download=True,
    )

    if args.cpu_only:
        device_map = "cpu"
    else:
        device_map = "auto"

    model = AutoModelForCausalLM.from_pretrained(
        args.checkpoint_path,
        device_map=device_map,
        trust_remote_code=True,
        resume_download=True,
    ).eval()

    config = GenerationConfig.from_pretrained(
        args.checkpoint_path, trust_remote_code=True, resume_download=True,
    )
    return model, tokenizer, config


def postprocess(self, y):
    # Have gr.Chatbot run every message and response through mdtex2html before rendering.
    if y is None:
        return []
    for i, (message, response) in enumerate(y):
        y[i] = (
            None if message is None else mdtex2html.convert(message),
            None if response is None else mdtex2html.convert(response),
        )
    return y


gr.Chatbot.postprocess = postprocess


def _parse_text(text):
    # Convert fenced code blocks to <pre><code> and escape Markdown-sensitive
    # characters inside them so the chat window shows the code verbatim.
    lines = text.split("\n")
    lines = [line for line in lines if line != ""]
    count = 0
    for i, line in enumerate(lines):
        if "```" in line:
            count += 1
            items = line.split("`")
            if count % 2 == 1:
                # Opening fence: the text after the backticks is the language tag.
                lines[i] = f'<pre><code class="language-{items[-1]}">'
            else:
                lines[i] = "<br></code></pre>"
        else:
            if i > 0:
                if count % 2 == 1:
                    # Inside a code block: replace special characters with HTML entities.
                    line = line.replace("`", r"\`")
                    line = line.replace("<", "&lt;")
                    line = line.replace(">", "&gt;")
                    line = line.replace(" ", "&nbsp;")
                    line = line.replace("*", "&ast;")
                    line = line.replace("_", "&lowbar;")
                    line = line.replace("-", "&#45;")
                    line = line.replace(".", "&#46;")
                    line = line.replace("!", "&#33;")
                    line = line.replace("(", "&#40;")
                    line = line.replace(")", "&#41;")
                    line = line.replace("$", "&#36;")
                lines[i] = "<br>" + line
    text = "".join(lines)
    return text


def _gc():
    import gc
    gc.collect()
    if torch.cuda.is_available():
        torch.cuda.empty_cache()


def _launch_demo(args, model, tokenizer, config):

    def predict(_query, _chatbot, _task_history):
        print(f"User: {_parse_text(_query)}")
        _chatbot.append((_parse_text(_query), ""))
        full_response = ""

        # Each iteration overwrites the last chat entry with the latest response.
        for response in model.chat_stream(tokenizer, _query, history=_task_history, generation_config=config):
            _chatbot[-1] = (_parse_text(_query), _parse_text(response))

            yield _chatbot
            full_response = _parse_text(response)

        print(f"History: {_task_history}")
        _task_history.append((_query, full_response))
        print(f"Qwen-Chat: {_parse_text(full_response)}")

    def regenerate(_chatbot, _task_history):
        if not _task_history:
            yield _chatbot
            return
        # Drop the last history entry and ask the same question again.
        item = _task_history.pop(-1)
        _chatbot.pop(-1)
        yield from predict(item[0], _chatbot, _task_history)

    def reset_user_input():
        return gr.update(value="")

    def reset_state(_chatbot, _task_history):
        _task_history.clear()
        _chatbot.clear()
        _gc()
        return _chatbot

    with gr.Blocks() as demo:
        gr.Markdown("""\
<p align="center"><img src="https://qianwen-res.oss-cn-beijing.aliyuncs.com/logo_qwen.jpg" style="height: 80px"/><p>""")
        gr.Markdown("""<center><font size=8>Qwen-Chat Bot</center>""")
        gr.Markdown(
            """\
<center><font size=3>This WebUI is based on Qwen-Chat, developed by Alibaba Cloud. \
(本 WebUI 基于 Qwen-Chat 打造,实现聊天机器人功能。)</center>""")

        chatbot = gr.Chatbot(label='Qwen-Chat', elem_classes="control-height")
        query = gr.Textbox(lines=2, label='Input')
        task_history = gr.State([])

        with gr.Row():
            empty_btn = gr.Button("🧹 Clear History (清除历史)")
            submit_btn = gr.Button("🚀 Submit (发送)")
            regen_btn = gr.Button("🤔️ Regenerate (重试)")

        submit_btn.click(predict, [query, chatbot, task_history], [chatbot], show_progress=True)
        submit_btn.click(reset_user_input, [], [query])
        empty_btn.click(reset_state, [chatbot, task_history], outputs=[chatbot], show_progress=True)
        regen_btn.click(regenerate, [chatbot, task_history], [chatbot], show_progress=True)

        gr.Markdown("""\
<font size=2>Note: This demo is governed by the original license of Qwen. \
We strongly advise users not to knowingly generate or allow others to knowingly generate harmful content, \
including hate speech, violence, pornography, deception, etc. \
(注:本演示受 Qwen 的许可协议限制。我们强烈建议,用户不应传播及不应允许他人传播以下内容,\
包括但不限于仇恨言论、暴力、色情、欺诈相关的有害信息。)""")

    demo.queue().launch(
        share=args.share,
        inbrowser=args.inbrowser,
        server_port=args.server_port,
        server_name=args.server_name,
    )


def main():
    args = _get_args()

    model, tokenizer, config = _load_model_tokenizer(args)

    _launch_demo(args, model, tokenizer, config)


if __name__ == '__main__':
    main()
```

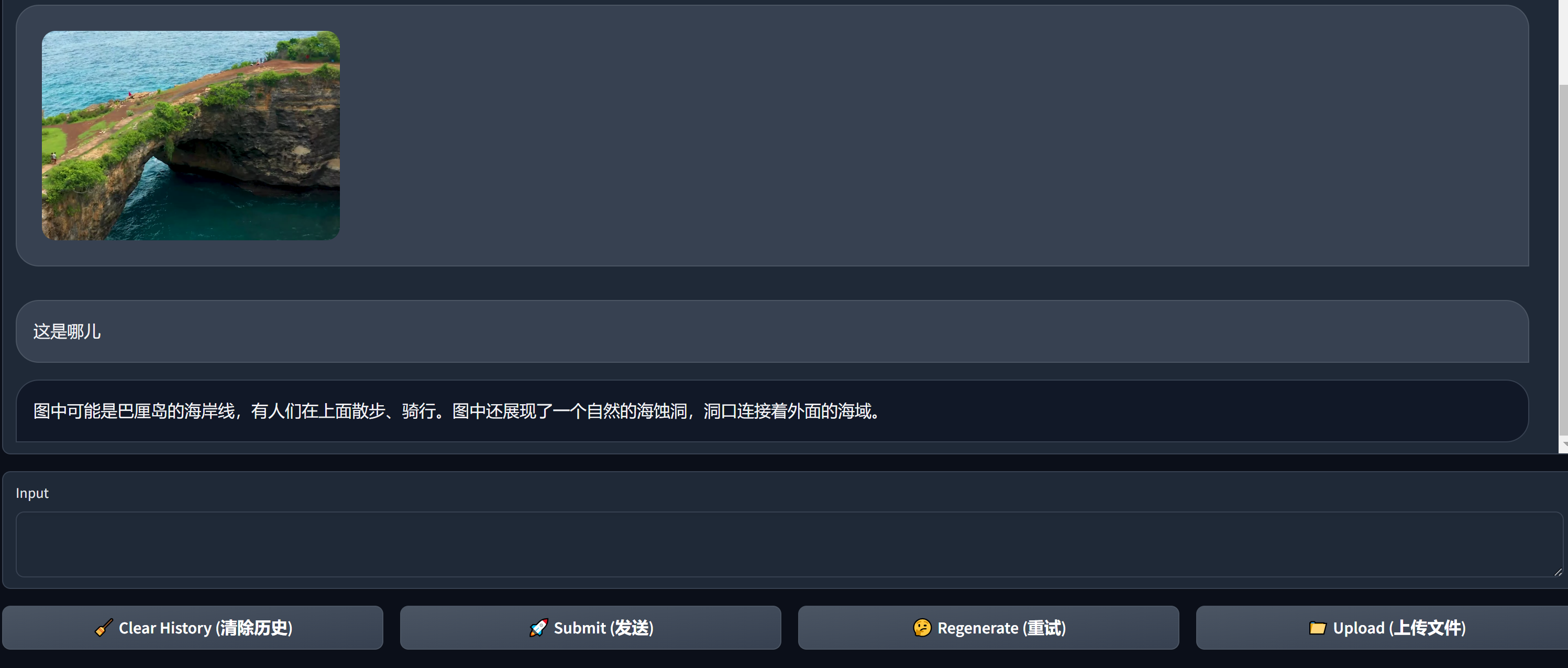
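The streaming logic in predict and regenerate is easier to follow without Gradio or a real model in the way. Below is a stripped-down sketch of the same pattern. `FakeChatModel` is a hypothetical stand-in that only mimics the shape of `chat_stream`, and it assumes, as the overwrite in the script's loop suggests, that each yielded value is the full response so far rather than just the newest token.

```python
from typing import Iterator, List, Tuple

History = List[Tuple[str, str]]


class FakeChatModel:
    """Hypothetical stand-in for the Qwen model; it just echoes the query."""

    def chat_stream(self, query: str, history: History) -> Iterator[str]:
        reply = f"echo: {query}"
        # Yield a growing prefix each time, i.e. the full response so far.
        for end in range(1, len(reply) + 1):
            yield reply[:end]


def predict(model: FakeChatModel, query: str,
            chatbot: History, task_history: History) -> Iterator[History]:
    """Stream partial answers into the chat window, then record the finished turn."""
    chatbot.append((query, ""))
    full_response = ""
    for response in model.chat_stream(query, history=task_history):
        chatbot[-1] = (query, response)  # overwrite the last entry, do not append
        yield chatbot
        full_response = response
    # Only the completed answer goes into the history passed to the model next time.
    task_history.append((query, full_response))


def regenerate(model: FakeChatModel,
               chatbot: History, task_history: History) -> Iterator[History]:
    """Drop the last turn and ask the same question again."""
    if not task_history:
        yield chatbot
        return
    query, _ = task_history.pop()
    chatbot.pop()
    yield from predict(model, query, chatbot, task_history)


if __name__ == "__main__":
    chatbot, task_history = [], []
    for _ in predict(FakeChatModel(), "hi", chatbot, task_history):
        pass  # a UI would redraw the chat window here
    print(chatbot)  # [('hi', 'echo: hi')]
```

Keeping two lists mirrors the script: the chatbot list holds what is displayed, while the task history holds what is sent back to the model as context.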

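3. Qwen-VL results

I also ran Qwen-VL. Some of its answers seem decent, while others still have problems. I did not bother taking screenshots, so that is all for this part.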
